As you integrate AI more deeply into your workflows, maintaining performance, compliance, and ethical responsibility at scale becomes harder. Building and scaling AI projects also means keeping teams collaborating effectively and keeping initiatives aligned with strategic business objectives. These complexities are compounded by the need for consistent quality and reliability, particularly during version updates and code fixes.

Through in-depth research and analysis, we’ve identified the most pressing challenges enterprises face today when scaling AI and machine learning. Among them are:

- Emerging bottlenecks in distributed AI workloads

- Cross-cloud data transfer latency in hybrid environments

- Complexity in AI model versioning and dependency control

- AI governance and compliance across jurisdictions

- Ensuring ethical AI and bias detection at scale

Read on to learn how your organization can tackle these issues head-on, optimize AI operations, and apply proven AI scaling strategies. You’ll find practical strategies and insights that will help future-proof your AI infrastructure and ensure long-term success.

The Importance of Scaling AI

According to the 2024 Google Cloud report, more than 60% of organizations regularly use generative AI. Among early adopters, 86% indicated that their revenue rose by 6% after deploying gen AI initiatives. Additionally, 74% of companies are already seeing a return on investment from their generative AI efforts. As more leaders adopt AI to optimize business operations, the focus shifts to scaling AI projects across the enterprise to add value.

Expanding AI enables businesses to harness vast amounts of data, providing the right analysis and predictions to drive informed decision-making. By scaling AI, enterprises can achieve a competitive advantage, staying ahead in an increasingly data-driven market.

In essence, AI scaling is about more than just expanding capabilities; it’s about integrating AI deeply into the fabric of business operations to drive significant business value. As you scale AI, you unlock new opportunities for innovation, efficiency, and growth, positioning your enterprise as a leader in AI-driven solutions.

Now, let’s move on to the issues you may encounter when expanding AI initiatives, along with potential remedies.


Emerging Challenges of AI Scaling and Solutions

Discover hidden and emerging issues you may face when scaling AI infrastructure, along with potential solutions and ways of improvement.

Emerging bottlenecks in distributed AI workloads

As you scale AI platforms, managing distributed AI workloads becomes increasingly complex, especially when they are spread across multi-cloud and hybrid environments. If left unresolved, the resulting bottlenecks can undermine AI model efficiency, slow predictions, and cause inconsistencies in production-level deployments.

A recent McKinsey report highlights that by 2030, many companies will be approaching data ubiquity, putting pressure on businesses to create a truly data-based organization. As data becomes more integrated across enterprise systems, AI workloads will increasingly demand real-time processing and decision-making capabilities.

I’m not saying this will apply to you. You may already be positioned for this shift. Still, it’s always wise to keep an ace up your sleeve. Wouldn’t you agree? In such a landscape, you should focus on latency-reduction technologies, such as edge computing and federated learning, to process data closer to its source and ensure seamless, high-performance AI operations.

Thus, scaling AI initiatives should begin by bringing together data from everywhere (structured, unstructured, real-time, and historical) so that AI models operate on reliable and timely information. For companies looking to facilitate data integration, a data management and analytics service can support the creation of effective data pipelines.
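
To make the federated learning idea mentioned above more concrete, here is a minimal sketch of federated averaging, where each site trains locally and only model weights, not raw data, travel to a coordinator. The function name `federated_average`, the weight vectors, and the sample counts are hypothetical illustrations rather than any specific framework's API.

```python
from typing import List, Sequence


def federated_average(
    client_weights: Sequence[Sequence[float]],
    client_sizes: Sequence[int],
) -> List[float]:
    """Combine per-client model weights into one global weight vector.

    Each client's contribution is proportional to its number of training samples.
    """
    if len(client_weights) != len(client_sizes) or not client_weights:
        raise ValueError("need one sample count per client, and at least one client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(client_weights[0])
    if any(len(w) != dim for w in client_weights):
        raise ValueError("all clients must share the same weight shape")

    averaged = [0.0] * dim
    for weights, size in zip(client_weights, client_sizes):
        share = size / total
        for i, value in enumerate(weights):
            averaged[i] += share * value
    return averaged


# Hypothetical example: three edge sites with different data volumes.
edge_updates = [[0.2, 1.0], [0.4, 0.8], [0.6, 0.0]]
edge_samples = [100, 300, 600]
global_weights = federated_average(edge_updates, edge_samples)
```

The site with the most data has the largest influence on the global model, while the raw records never leave the site that collected them.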

Cross-cloud data transfer latency in hybrid environments

Cross-cloud latency is particularly relevant for enterprises deploying AI across hybrid and multi-cloud environments. It can directly affect enterprise customers who rely on seamless, high-speed processing for use cases such as fraud detection, real-time customer personalization, and predictive maintenance.

In fact, the International Data Corporation (IDC) predicts that by 2025, 70% of enterprises will form strategic partnerships with cloud providers for GenAI platforms, developer tools, and infrastructure. This underscores the growing reliance on multi-cloud environments. However, as multi-cloud adoption accelerates, interoperability and latency between cloud providers are becoming significant pain points, especially for latency-sensitive AI tasks that require real-time data transfer and model execution.

The growing reliance on cloud environments highlights the need for enterprises providing AI solutions to prioritize latency reduction in multi-cloud setups for scalable, real-time performance.

In 2024 and beyond, emerging technologies like edge computing, AI-powered network orchestration, and inter-cloud direct connections will become crucial for companies looking to reduce cross-cloud latency and scale AI operations.
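
One simple way to reason about cross-cloud latency is to measure it per route and place latency-sensitive inference on the route with the best tail latency. The sketch below assumes you already collect round-trip samples; the route names, sample values, and the 50 ms budget are all hypothetical.

```python
import math
from typing import Dict, List


def percentile(samples: List[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list of samples."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def pick_route(latencies_ms: Dict[str, List[float]], budget_ms: float) -> str:
    """Return the route with the lowest p95 latency, or raise if none fits the budget."""
    p95 = {route: percentile(values, 95) for route, values in latencies_ms.items()}
    best = min(p95, key=p95.get)
    if p95[best] > budget_ms:
        raise RuntimeError(f"no route meets the {budget_ms} ms budget")
    return best


# Hypothetical round-trip measurements in milliseconds.
measured = {
    "cloud-a -> cloud-b (public internet)": [40, 42, 45, 120, 41, 43, 44, 46, 39, 150],
    "cloud-a -> cloud-b (direct connect)": [18, 19, 20, 21, 19, 18, 22, 20, 19, 25],
    "edge node (local)": [5, 6, 5, 7, 6, 5, 30, 6, 5, 6],
}
chosen = pick_route(measured, budget_ms=50)
```

Ranking by p95 rather than by the average is a deliberate choice here: the edge node is fastest most of the time, but a single slow outlier pushes its tail latency above the direct connection, which is what real-time use cases tend to feel.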

Complexity in AI model versioning, dependency control, and data governance

Another concern in AI scaling is managing multiple versions of AI models and their dependencies as part of AI and ML implementation and integration. For enterprise clients using AI/ML tools, poor version and dependency control can result in model drift, performance degradation, or security vulnerabilities.

The MGit 2024 report introduces advanced practices for AI/ML solution providers to address the stated challenge, especially as AI deployments scale. It was produced by researchers from Microsoft Research and collaborators from Columbia University, Stanford University, and NVIDIA.

Here are some forward-thinking practices AI/ML providers can consider:

- Lineage-based model management. Implementing the lineage-based model described in MGit could provide a more detailed and scalable framework for handling complex model dependencies.

- Memory deduplication via delta compression. Techniques like content-based hashing and delta compression reduce storage and memory redundancy by efficiently managing models that share parameters or have slight variations.

- Automated cascading model updates. The report presents the ‘run_update_cascade’ feature that automatically updates derivative models when a parent model is modified.

- Collocated model execution for real-time inference. As described in MGit, the collocation of related models during inference can help optimize resource efficiency and improve system performance. It involves sharing layers between similar models to reduce memory and speed up inference.
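
The lineage and cascading-update ideas in the list above can be sketched as a small parent-to-child graph. This is an illustrative sketch only: the `LineageGraph` class and its `update_cascade` method are hypothetical names and do not reproduce MGit's actual API. It also assumes each model has a single parent, so a breadth-first walk always visits parents before their children.

```python
from collections import deque
from typing import Callable, Dict, List, Optional


class LineageGraph:
    """Tracks which models were derived from which, plus a version counter."""

    def __init__(self) -> None:
        self.children: Dict[str, List[str]] = {}
        self.version: Dict[str, int] = {}

    def add_model(self, name: str, parent: Optional[str] = None) -> None:
        if name in self.version:
            raise ValueError(f"{name} is already registered")
        if parent is not None and parent not in self.version:
            raise KeyError(f"unknown parent {parent}")
        self.version[name] = 1
        self.children[name] = []
        if parent is not None:
            self.children[parent].append(name)

    def update_cascade(self, root: str, retrain: Callable[[str], None]) -> List[str]:
        """Bump `root`, then re-derive every descendant, parents first."""
        self.version[root] += 1
        updated: List[str] = []
        queue = deque(self.children[root])
        while queue:
            model = queue.popleft()
            retrain(model)  # caller-supplied hook, e.g. fine-tune from the new parent
            self.version[model] += 1
            updated.append(model)
            queue.extend(self.children[model])
        return updated


# Hypothetical model family.
graph = LineageGraph()
graph.add_model("base-encoder")
graph.add_model("sentiment-head", parent="base-encoder")
graph.add_model("ner-head", parent="base-encoder")
graph.add_model("sentiment-head-distilled", parent="sentiment-head")

retrained: List[str] = []
order = graph.update_cascade("base-encoder", retrain=retrained.append)
```

Keeping the retraining step as a callback means the graph only decides what needs updating and in which order, while the actual training job stays in whatever pipeline you already use.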

Adopting these practices could further enhance your AI platforms’ scalability, efficiency, and robustness, particularly in distributed and cloud-based AI workloads.
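
As a rough illustration of the content-based hashing idea behind deduplication, the sketch below stores each distinct layer once and lets models reference layers by hash. It covers only hashing, not delta compression, and the `LayerStore` class, layer names, and values are hypothetical.

```python
import hashlib
import struct
from typing import Dict, List, Tuple


class LayerStore:
    """Stores each distinct layer once, keyed by a hash of its contents."""

    def __init__(self) -> None:
        self.blobs: Dict[str, bytes] = {}
        self.models: Dict[str, List[Tuple[str, str]]] = {}

    def save_model(self, name: str, layers: Dict[str, List[float]]) -> None:
        manifest: List[Tuple[str, str]] = []
        for layer_name, values in layers.items():
            # Serialize the layer as little-endian doubles and hash the bytes.
            blob = struct.pack(f"<{len(values)}d", *values)
            digest = hashlib.sha256(blob).hexdigest()
            self.blobs.setdefault(digest, blob)  # identical layers share one blob
            manifest.append((layer_name, digest))
        self.models[name] = manifest

    def load_model(self, name: str) -> Dict[str, List[float]]:
        restored: Dict[str, List[float]] = {}
        for layer_name, digest in self.models[name]:
            blob = self.blobs[digest]
            restored[layer_name] = list(struct.unpack(f"<{len(blob) // 8}d", blob))
        return restored


store = LayerStore()
base = {"embed": [0.1, 0.2, 0.3], "encoder": [1.0, 2.0], "head": [0.5]}
fine_tuned = {**base, "head": [0.7]}  # only the head differs from the base model
store.save_model("base", base)
store.save_model("fine-tuned", fine_tuned)
unique_layers = len(store.blobs)
```

Two models with three layers each end up as four stored blobs instead of six, because the shared embedding and encoder layers are written only once.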

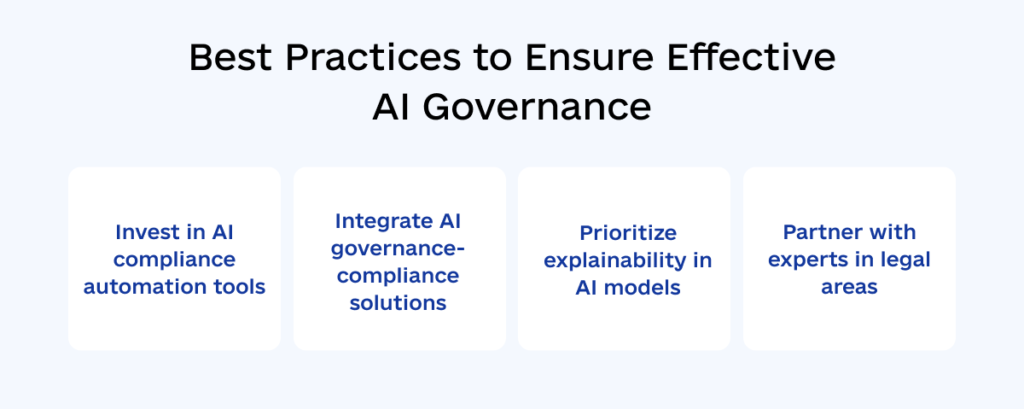

AI governance and compliance across jurisdictions

We all understand that robust AI regulatory compliance is crucial for business success. The real challenge, however, lies in this question: ‘How do we keep pace with rapidly evolving standards and benchmarks as AI capabilities advance?’

Jurisdictions such as the EU, through the AI Act, and the USA, through emerging frameworks like the AI Risk Management Framework, are setting increasingly stringent standards for data usage, AI ethics, and transparency. The number of AI-related regulations also continues to grow. For instance, the number of AI-related regulations in the USA grew by 56.3%, from 16 in 2022 to 25 in 2023, as highlighted in the AI Index Report 2024. This trend will likely accelerate, especially with the ongoing wave of innovation in AI. Failure to comply with these regulations can result in hefty fines, reputational damage, and restricted market access for companies operating in this space.

As you scale AI, the focus must go beyond performance, ensuring intelligent solutions are developed and expanded with ethics and responsibility in mind.

Given this tightening regulatory landscape, consider building a flexible, modular AI governance framework that adapts to changing regulations. This involves integrating dynamic policy mapping, Explainable AI (XAI), and data localization and encryption to demonstrate transparency and automate compliance checks across jurisdictions.
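
One way to picture dynamic policy mapping is to keep the rules as data and run the same check against every deployment. In the sketch below, the jurisdiction codes and control names are invented placeholders and do not reflect the actual requirements of any regulation; `Deployment` and `compliance_gaps` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set

# Hypothetical policy map: jurisdiction -> controls a deployment must have.
POLICY_MAP: Dict[str, Set[str]] = {
    "EU": {"explainability_report", "data_localization", "encryption_at_rest"},
    "US": {"risk_assessment", "encryption_at_rest"},
}


@dataclass
class Deployment:
    name: str
    jurisdictions: List[str]
    controls: Set[str] = field(default_factory=set)


def compliance_gaps(
    deployment: Deployment, policy_map: Dict[str, Set[str]]
) -> Dict[str, Set[str]]:
    """Return the missing controls per jurisdiction (an empty dict means no gaps)."""
    gaps: Dict[str, Set[str]] = {}
    for jurisdiction in deployment.jurisdictions:
        if jurisdiction not in policy_map:
            raise KeyError(f"no policy defined for {jurisdiction}")
        missing = policy_map[jurisdiction] - deployment.controls
        if missing:
            gaps[jurisdiction] = missing
    return gaps


scoring_service = Deployment(
    name="credit-scoring",
    jurisdictions=["EU", "US"],
    controls={"encryption_at_rest", "risk_assessment"},
)
gaps = compliance_gaps(scoring_service, POLICY_MAP)
```

Because the policy map is plain data, updating it when a regulation changes does not require touching the check itself, which is what makes the mapping "dynamic" in practice.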

Ensuring ethical AI and bias detection at scale

One of the primary concerns surrounding AI ethics is the potential for reinforcing existing biases as AI systems scale. Why so? Bias exists on the human level. When that bias is built into AI, it is amplified by the enormous volume of data and the speed of deployment.

Where are we headed with this? The real challenge lies in balancing AI’s rapid growth with ethical responsibility, ensuring that scaling doesn’t result in an exponential increase in biased outcomes. This is especially pertinent now that the European AI Act has entered into force (August 2024). It establishes a regulatory framework for AI that emphasizes safety, transparency, and respect for fundamental rights while also fostering innovation.

We want to share insights from the 2024 report ‘Towards Trustworthy AI: A Review of Ethical and Robust Large Language Models (LLMs)’, which highlights strategies and best practices for ethical AI development. While the focus is on next-gen LLMs, many of the techniques discussed apply to AI engineering as a whole and can help you, as an AI/ML provider, further enhance your approach to creating ethical, transparent, and responsible AI systems.

Here are some practical recommendations based on the report analysis:

- Ethical considerations must be central. Adopt more structured ethical design methodologies, such as value-sensitive design, to align AI systems with societal values.

- Addressing algorithmic bias. The report underscores the need for continuous improvement. Implement regular audits and diverse review committees to strengthen bias detection and mitigation.

- Explainability and transparency. The report highlights the importance of Explainable AI (XAI) techniques like integrated gradients and surrogate models to improve the clarity of complex models.

- Accountability in AI systems. Strengthening documentation and internal ethics review processes ensures robust accountability.

- Environmental impact. Creating energy-efficient algorithms and tracking AI’s carbon impact can set a strong example for sustainable AI practices.

- Natural language processing (NLP). Utilizing NLP can help in ensuring accurate responses and improving decision-making processes in AI systems, which is crucial for maintaining ethical standards and reducing bias.
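
To show what integrated gradients, named in the explainability point above, actually computes, here is a minimal sketch for a tiny logistic model. The weights, input, and all-zero baseline are hypothetical, and the path integral is approximated with a midpoint Riemann sum; production systems would normally rely on an established XAI library instead.

```python
import math
from typing import List, Sequence


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights: Sequence[float], bias: float, x: Sequence[float]) -> float:
    """Logistic model: sigmoid of the weighted sum of the inputs."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def integrated_gradients(
    weights: Sequence[float],
    bias: float,
    x: Sequence[float],
    baseline: Sequence[float],
    steps: int = 200,
) -> List[float]:
    """Attribute the change in prediction from `baseline` to `x` to each feature."""
    avg_grad = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps  # midpoint of the k-th slice of the path
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        p = predict(weights, bias, point)
        # Gradient of sigmoid(w.x + b) with respect to x_i is p * (1 - p) * w_i.
        for i, w in enumerate(weights):
            avg_grad[i] += p * (1.0 - p) * w / steps
    return [(xi - b) * g for xi, b, g in zip(x, baseline, avg_grad)]


# Hypothetical model with one positive, one negative, and one unused feature.
weights, bias = [2.0, -1.0, 0.0], 0.0
x, baseline = [1.0, 1.0, 1.0], [0.0, 0.0, 0.0]
attributions = integrated_gradients(weights, bias, x, baseline)
completeness_gap = abs(
    sum(attributions) - (predict(weights, bias, x) - predict(weights, bias, baseline))
)
```

A useful sanity check is that the attributions add up to the difference between the prediction at the input and at the baseline, which is why the sketch computes `completeness_gap`; it should be close to zero.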

By adopting these best practices, you can strengthen your position as a leader in ethical and transparent AI solutions and further build trust with your global client base.
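
A regular bias audit needs at least one number to track over time. The sketch below uses the demographic parity gap (the difference in positive-decision rates between groups) as one common choice; this metric is our illustrative pick rather than something prescribed by the report, and the group labels, sample data, and 0.10 review threshold are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(decisions: Iterable[Tuple[str, int]]) -> Dict[str, float]:
    """Share of positive decisions (1) per group, from (group, decision) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, decision in decisions:
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(rates: Dict[str, float]) -> float:
    """Difference between the highest and lowest group selection rates."""
    return max(rates.values()) - min(rates.values())


# Hypothetical audit sample: (group label, model decision).
audit_sample = (
    [("A", 1)] * 60 + [("A", 0)] * 40 + [("B", 1)] * 45 + [("B", 0)] * 55
)
rates = selection_rates(audit_sample)
gap = demographic_parity_gap(rates)
needs_review = gap > 0.10  # assumed internal policy threshold, not a standard
```

Running the same check on every model release gives a review committee a consistent starting point, although a single metric never captures fairness on its own.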

The final point we’d like to emphasize is the importance of continuously fostering diverse teams and collaborating with strategic technology partners, such as 8allocate through AI consulting for enterprise-scale programs, who bring fresh perspectives from a range of backgrounds and experiences. Engaging different experts across the AI lifecycle will drive innovation and ensure more inclusive and ethical AI solutions that better serve your clients’ needs.

Assessing and Developing a Strategy for Scaling AI

Assessing and developing a strategy for scaling AI, including building your AI adoption roadmap, is crucial for overcoming the inherent challenges and ensuring successful AI initiatives. Here are some key considerations to guide your strategy:

- Evaluate current AI capabilities. Begin by assessing your existing AI infrastructure and capabilities. Identify the strengths and weaknesses of your current AI systems, and determine the scalability of your AI models and tools.

- Define clear objectives. Establish clear, measurable objectives for your AI scaling efforts. These objectives should align with your overall business goals and provide a roadmap for your AI initiatives.

- Invest in scalable infrastructure. Ensure that your infrastructure can support the increased demands of scaled AI operations. This includes investing in robust data management systems, scalable cloud solutions, and advanced AI tools that can handle large-scale data processing and model training.

- Foster a data-driven culture. Encourage a data-first corporate culture to drive informed decision-making. This involves training your workforce to understand and leverage AI insights, and promoting collaboration between data scientists, engineers, and business leaders.

- Implement strong data governance. Effective AI data governance is essential for maintaining data quality and compliance as you scale AI. Establish clear policies and procedures for data management, ensuring that data is accurate, secure, and used ethically.

- Monitor and optimize performance. Regularly monitor the performance of your AI systems and make necessary adjustments to optimize efficiency and effectiveness. This includes tracking key performance indicators (KPIs) and using machine learning operations (MLOps) practices to streamline model deployment and management.
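
For the monitoring step above, one KPI worth tracking is how far live inputs have drifted from the data a model was trained on. The sketch below uses the population stability index (PSI) as an illustrative drift statistic; the choice of PSI, the bin edges, and the score samples are assumptions made for this example.

```python
import math
from typing import List, Sequence


def bin_shares(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Fraction of values falling in each bin defined by sorted `edges`."""
    if not values:
        raise ValueError("values must not be empty")
    counts = [0] * (len(edges) + 1)
    for value in values:
        # The bin index is the number of edges at or below the value.
        counts[sum(value >= edge for edge in edges)] += 1
    # A small floor keeps empty bins from producing log(0).
    return [max(count / len(values), 1e-6) for count in counts]


def psi(reference: Sequence[float], live: Sequence[float], edges: Sequence[float]) -> float:
    """Population stability index between a reference sample and a live sample."""
    ref = bin_shares(reference, edges)
    cur = bin_shares(live, edges)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


# Hypothetical model scores at training time and in production.
edges = [0.25, 0.5, 0.75]
training = [0.1] * 25 + [0.3] * 25 + [0.6] * 25 + [0.9] * 25
production = [0.1] * 10 + [0.3] * 20 + [0.6] * 30 + [0.9] * 40
drift = psi(training, production, edges)
```

A PSI of zero means the two distributions match bin for bin, and larger values indicate a bigger shift. Where to set the alert threshold is a judgment call for your team and is best tuned against past incidents.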

By carefully assessing your current capabilities and developing a comprehensive strategy, you can overcome the challenges of scaling AI and unlock its full potential. This strategic approach will enable you to drive significant business value, achieve your growth objectives, and maintain a competitive edge in the rapidly evolving AI landscape.

Bottom Line

Scaling AI is about more than adding power. It’s about building intelligent, ethical, and efficient systems that can evolve with your business. Remember, each challenge is an opportunity to innovate and optimize your AI infrastructure. As you prepare for the road ahead, it’s crucial to integrate emerging technologies and practices that will enhance your AI performance and ensure compliance and ethical responsibility. By focusing on enhancing operational performance through AI, you can drive both immediate impact and long-term success, positioning your enterprise as a leader in AI-driven solutions.

At 8allocate, we specialize in building tailored AI solutions that support global scalability and performance optimization, ensuring your AI infrastructure is equipped to tackle future challenges. Together, we can help your business receive tangible value from implementing responsible and scalable AI solutions. Contact us today to discover how we can collaborate to push the frontiers of AI scalability, making your AI infrastructure more agile, ethical, and future-ready.

Frequently Asked Questions

Quick Guide to Common Questions

Why is scaling AI critical for enterprise success?

Scaling AI enables enterprises to harness vast amounts of data for predictive analytics, automate decision-making, and drive operational efficiency. It enhances competitiveness by integrating AI deeply into business operations, ensuring long-term innovation and growth.

What are the biggest challenges enterprises face when scaling AI?

Key challenges include:

- Bottlenecks in distributed AI workloads – Managing AI across hybrid and multi-cloud environments.

- Cross-cloud data transfer latency – Ensuring real-time AI execution across cloud platforms.

- AI model versioning and governance – Preventing model drift, dependency issues, and compliance gaps.

- Regulatory and ethical AI concerns – Adapting to evolving AI regulations while maintaining fairness and transparency.

- Bias detection and mitigation at scale – Ensuring AI-driven decisions remain unbiased and ethically aligned.

How can enterprises optimize AI infrastructure for scalability?

- Implement edge computing and federated learning to reduce latency and improve distributed AI processing.

- Optimize multi-cloud architectures with AI-powered network orchestration for seamless data transfers.

- Adopt automated model management to streamline version control and prevent drift.

- Ensure robust data governance with compliance-driven AI frameworks across jurisdictions.

How can AI model governance be improved in large-scale deployments?

AI model governance should include:

- Dynamic policy mapping – Aligning AI operations with changing regulatory standards.

- Explainable AI (XAI) – Ensuring transparency in model decisions.

- Automated model updates – Implementing cascading updates to keep AI models performant and compliant.

What strategies help mitigate AI bias at scale?

- Regular audits and diverse review committees to monitor fairness in AI decision-making.

- Ethical AI frameworks incorporating value-sensitive design and transparency principles.

- Explainability techniques (XAI) to provide clear reasoning for AI-driven outputs.

How can enterprises future-proof their AI strategy?

- Invest in scalable AI infrastructure that supports high-performance computing and data management.

- Foster a data-driven culture to ensure AI adoption aligns with business objectives.

- Adopt MLOps best practices to streamline AI deployment, monitoring, and continuous improvement.

How does 8allocate help enterprises scale AI efficiently and ethically?

8allocate provides custom AI solutions, AI governance frameworks, and infrastructure optimization to help enterprises scale AI operations while ensuring compliance, efficiency, and ethical responsibility.