Today’s most advanced LLMs have pushed the boundaries of what is possible in language translation, yet challenges in global communication, such as cultural nuance and linguistic diversity, remain unsolved. To maintain leadership in AI-driven language processing, you must go beyond current LLM capabilities and invest in custom AI translation and NLP solutions that tackle the next generation of linguistic issues in global communication.

This article covers the latest advancements in the LLM space and offers practical perspectives for teams already employing AI translation technology.

What Are LLMs in Language Translation

Large Language Models (LLMs) have revolutionized the field of language translation by enabling machines to understand and generate human language with unprecedented accuracy. These models, a subset of artificial intelligence (AI), employ deep learning techniques to analyze and process vast amounts of text data. By learning the intricate patterns and structures of language, LLMs can produce human-like text and perform a variety of tasks, including language translation, sentiment analysis, and content creation.

The ability of LLMs to generate human language stems from their extensive training on diverse datasets, which allows them to capture the nuances and complexities of human communication. This has led to significant advancements in AI-powered language translation, making it possible to translate languages with a level of fluency and accuracy that was previously unattainable. As a result, LLMs are now at the forefront of natural language processing (NLP) and are driving innovation in the field of language translation.

Technical Foundations of LLMs

LLMs are built on the foundation of transformer models, a type of neural network specifically designed for handling sequential data. The transformer model employs self-attention mechanisms, which enable it to weigh the importance of different words in a sentence. This allows the model to capture complex nuances and dependencies in language, ensuring that the generated text is coherent and contextually relevant.

The self-attention mechanism is a key component of the transformer model, as it allows the model to focus on different parts of the input sequence when generating each word in the output. This enables LLMs to understand the context and relationships between words, leading to more accurate and fluent translations. By using these advanced deep learning techniques, LLMs can produce human-like text that closely mirrors the intricacies of human language.
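As a rough illustration of how self-attention weighs words against each other, the sketch below computes scaled dot-product attention in plain Python. It rests on simplifying assumptions: the two-dimensional embeddings are made-up numbers, and the queries, keys, and values are the raw embeddings rather than the learned projections a real transformer would use.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention over a short sequence.

    Each argument is a list of equal-length vectors, one per token.
    Returns the attended vectors and the attention weights per token.
    """
    dim = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this token's query to every token's key.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim)
            for k in keys
        ]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        attended = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(attended)
        all_weights.append(weights)
    return outputs, all_weights

# Toy embeddings for three tokens (illustrative numbers, not learned).
tokens = ["the", "bank", "river"]
embeddings = [[0.1, 0.0], [1.0, 1.0], [0.9, 1.1]]
outputs, weights = self_attention(embeddings, embeddings, embeddings)
```

Here `weights[1]` holds how strongly "bank" attends to each token. With these toy vectors it leans toward "bank" and "river" rather than "the", which is the kind of context signal a translation model can use to disambiguate a word.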

Latest Areas in Large Language Models Development

In 2024, the development of specialized LLMs saw several advancements that companies already deeply embedded in the LLM space might find novel and useful. Below are some of the latest trends and innovations.

Neuro-symbolic LLMs

While traditional LLMs are good at generating fluent and grammatically correct sentences, they often struggle with tasks that require human-like reasoning.

Neuro-symbolic LLMs address this by combining neural networks with symbolic reasoning, enabling the model to use symbolic rules as part of its decision-making process. This can help handle grammatical structures, disambiguate meanings, and apply linguistic rules more effectively during translation. Ultimately, this allows the model to understand the literal meaning of concepts better, leading to higher fidelity in communication.
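One simple way to picture the combination is a neural model that proposes scored translations and a symbolic rule layer that checks them. The sketch below is only an illustration under stated assumptions: the glossary, the candidate translations, and their scores are hypothetical stand-ins, and real neuro-symbolic systems integrate rules far more deeply than a post-hoc filter.

```python
# Hypothetical glossary rule: when the source contains the key,
# the translation must contain the mapped target term.
GLOSSARY = {"force majeure": "fuerza mayor", "liability": "responsabilidad"}

def violated_rules(source, candidate, glossary=GLOSSARY):
    """Return the glossary terms a candidate translation fails to honor."""
    src, cand = source.lower(), candidate.lower()
    return [
        term
        for term, required in glossary.items()
        if term in src and required not in cand
    ]

def pick_translation(source, scored_candidates):
    """Choose the best neural candidate that satisfies the symbolic rules.

    scored_candidates is a list of (translation, model_score) pairs that
    stands in for the output of a neural translation model. Candidates are
    ranked by fewest rule violations first, then by highest model score.
    """
    ranked = sorted(
        scored_candidates,
        key=lambda pair: (len(violated_rules(source, pair[0])), -pair[1]),
    )
    return ranked[0][0]

source = "The liability clause excludes force majeure events."
candidates = [
    ("La cláusula de obligación excluye eventos de fuerza mayor.", 0.91),
    ("La cláusula de responsabilidad excluye eventos de fuerza mayor.", 0.88),
]
best = pick_translation(source, candidates)
```

The first candidate has the higher model score but misses the required legal term, so the rule layer selects the second one.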

By adopting neuro-symbolic LLMs, companies that rely on AI-powered tools can improve meaning accuracy across specialized domains, like legal, medical, and technical languages, where precise vocabulary and contextual understanding are crucial.

Cross-domain adaptability and transfer learning

Traditional models often struggle to generalize well across domains (e.g., medical, legal, technical) because they are typically trained on data from a specific domain. Cross-domain adaptability refers to an LLM’s ability to perform well across many domains, which comes from training on diverse datasets that span them. This training allows the models to generate contextually appropriate responses in diverse scenarios.

Fine-tuning is crucial in optimizing large language models (LLMs) for specific tasks and domains. It involves adapting pre-trained models to specialized applications, balancing better task performance against preserving general capabilities. Various fine-tuning techniques improve efficiency, reduce overfitting, and allow for customization in resource-constrained environments.

Another challenge in machine translation is knowledge transfer. Training models from scratch for each new domain or task is resource-intensive, especially when labeled data is scarce. LLMs employ transfer learning by leveraging knowledge gained in one domain to improve performance in another. Advances in transfer learning, notably large pre-trained models such as GPT-4, have made adapting to new domains more feasible.

Through these methods, companies that specialize in AI-driven language models can offer machine translation services in new domains quickly and efficiently without extensive data collection and retraining.

Hybrid human-AI language translation models

LLMs offer advanced capabilities such as in-context learning, steerability through prompt engineering, and a degree of symbolic reasoning, which resemble human cognitive functions in language tasks. While these characteristics make LLMs highly versatile, they still require human intervention to handle cultural sensitivities and emotional undertones and to ensure the ethical and responsible use of AI.

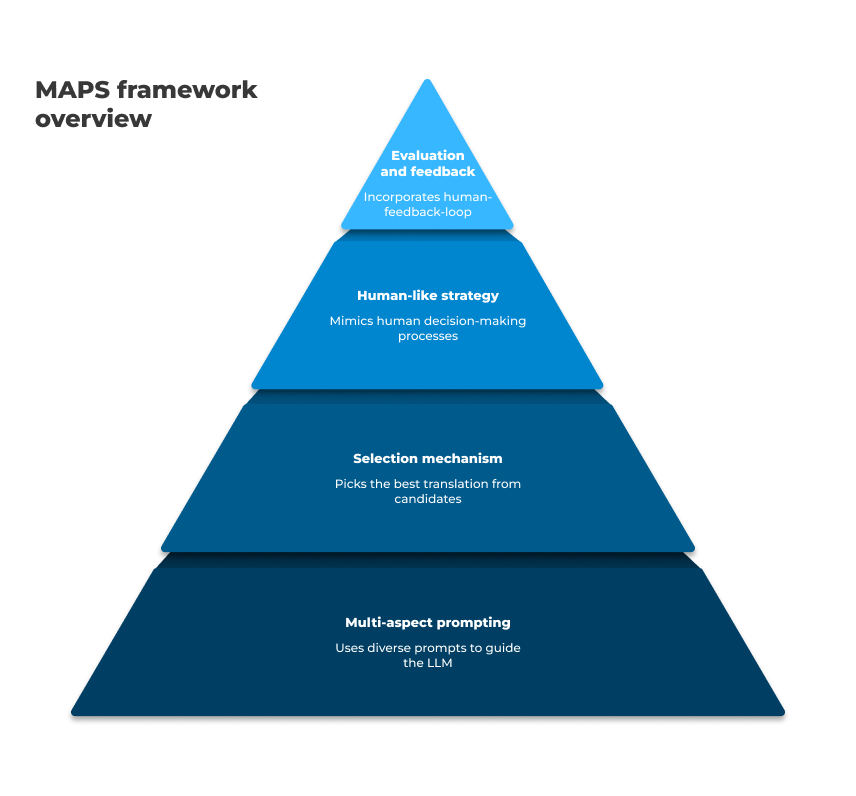

In a March 2024 paper, Zhiwei He, a researcher who focuses on NLP and LLMs, and his colleagues introduced the MAPS (Multi-Aspect Prompting and Selection) framework, which aims to enhance LLM translation capabilities by emulating human translation strategies. By mimicking the human decision-making process, the framework aims to improve translation accuracy.
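The general shape of a multi-aspect prompting and selection loop can be sketched as follows. This is a simplified reading of the idea, not the authors' implementation: the three aspects (keywords, topics, demonstrations), the prompt wording, and the `ask_llm` and `score_quality` callables are all assumptions introduced for illustration, and the stubs at the bottom replace a real model and a real quality estimator.

```python
from typing import Callable, Dict, Tuple

ASPECTS = ["keywords", "topics", "demonstrations"]

def maps_translate(
    source: str,
    target_lang: str,
    ask_llm: Callable[[str], str],
    score_quality: Callable[[str, str], float],
) -> Tuple[str, Dict[str, str]]:
    """Generate one candidate per aspect, then keep the best-scoring one."""
    # A plain translation serves as the fallback candidate.
    candidates = {"baseline": ask_llm(f"Translate into {target_lang}: {source}")}
    for aspect in ASPECTS:
        # Step 1: ask the model for translation-related knowledge.
        knowledge = ask_llm(f"List the {aspect} relevant to translating: {source}")
        # Step 2: translate again, guided by that knowledge.
        candidates[aspect] = ask_llm(
            f"Using these {aspect}: {knowledge}\n"
            f"Translate into {target_lang}: {source}"
        )
    # Step 3: select the candidate the quality scorer prefers.
    best = max(candidates, key=lambda name: score_quality(source, candidates[name]))
    return candidates[best], candidates

# Deterministic stubs so the sketch runs without a model (demo only).
def fake_llm(prompt: str) -> str:
    if prompt.startswith("List the"):
        return "stub knowledge"
    if prompt.startswith("Using these keywords"):
        return "keyword-guided translation"
    return "plain translation"

def fake_scorer(source: str, translation: str) -> float:
    return float(len(translation))  # longer is "better", for the demo only

best, candidates = maps_translate("Hello world", "German", fake_llm, fake_scorer)
```

In practice `ask_llm` would call whichever model you use and `score_quality` would be a reference-free quality estimation model; the selection step matters because some of the extracted knowledge can be noisy or unhelpful.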

New hybrid models combine AI’s efficiency with human translators’ nuanced understanding. Companies implementing AI-based language translation systems within AI/ML implementation and integration services can incorporate these hybrid models into their enterprise offerings, particularly in industries like legal, medical, or literary translation where human oversight is critical.

Multimodal LLMs

Certain translations require a deep understanding of cultural context, which might be conveyed through imagery, gestures, or situational context rather than just words. Many forms of communication involve symbols, signs, or other visual elements that carry meaning and need to be interpreted alongside text.

What’s the point? Traditional text-based translation models can miss meaning in content that combines text, images, and other media types. Multimodal LLMs combine computer vision and NLP to understand diverse information. Such models process and translate content across different modalities simultaneously, ensuring that the translation is coherent and that the different elements (e.g., spoken words, on-screen text, visual demonstrations) align correctly. This is especially relevant for translating visually rich content, such as advertisements, product descriptions, and instructional materials, where there is a close relationship between text and imagery.

Models such as LLaVA, an open-source vision-language model, and Meta’s SeamlessM4T, which translates speech and text across many languages, show significant progress in multimodal and multilingual content understanding. Such models use techniques like cross-modal embeddings and self-attention mechanisms to align text with visual or audio information, reducing errors typically associated with text-only translation.

This trend holds immense promise for global businesses aiming to communicate more effectively in diverse multimedia environments. For example, integrating multimodal LLMs into AI translation platforms represents a key advancement, allowing firms to offer translation services that include text, visual, and auditory content, thus boosting the versatility and impact of their solutions.

Few-shot and zero-shot transfer learning advances

Few-shot and zero-shot transfer learning helps address the challenges of limited data for low-resource languages and domain-specific translation.

These techniques allow LLMs to generalize from minimal training data, or even none, to perform new tasks, including text translation. For example, GPT-3 demonstrated strong few-shot performance on translation and question-answering tasks with minimal task-specific data.
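In prompt-based use, the difference between zero-shot and few-shot translation comes down to whether the prompt includes worked examples. The helper below is a minimal sketch of that idea; the function name, the prompt layout, and the example pairs are illustrative assumptions, not a format required by any particular model.

```python
def build_prompt(text, source_lang, target_lang, examples=()):
    """Build a translation prompt; with no examples it is zero-shot."""
    lines = [f"Translate from {source_lang} to {target_lang}."]
    # Each (source, target) pair becomes an in-context demonstration.
    for src, tgt in examples:
        lines.append(f"{source_lang}: {src}")
        lines.append(f"{target_lang}: {tgt}")
    # The final line is left open for the model to complete.
    lines.append(f"{source_lang}: {text}")
    lines.append(f"{target_lang}:")
    return "\n".join(lines)

zero_shot = build_prompt("Good morning", "English", "French")
few_shot = build_prompt(
    "Good morning",
    "English",
    "French",
    examples=[("Thank you", "Merci"), ("See you tomorrow", "À demain")],
)
```

The few-shot prompt costs more tokens per request but needs no retraining, which is why it suits low-resource languages and narrow domains where labeled data is scarce.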

In many real-world scenarios, gathering and labeling large amounts of data for every possible concept isn’t feasible, and related approaches such as on-device AI are becoming increasingly relevant for low-latency translation. Implementing few-shot and zero-shot learning models will enable organizations to handle text translation with minimal or no additional labeled data, which in turn will help reduce the costs associated with data labeling and annotation.

There you have it: the 5 latest advancements in LLM development to keep up with:

- Neuro-symbolic LLMs

- Cross-domain adaptability and transfer learning

- Hybrid human-AI translation models

- Multimodal LLMs

- Few-shot and zero-shot transfer learning advances

These advancements are at the forefront of LLM research today and offer companies specialized in AI-powered translation technology new perspectives and opportunities for innovation, even as you continue to lead the machine translation industry.

Human Language Understanding and LLMs

LLMs have made significant strides in human language understanding, much like conversational AI technology, allowing them to comprehend and respond to natural language queries in a contextually relevant manner. This progress is largely due to the extensive training data used to develop these models, which helps them learn the subtleties and nuances of human language. However, the accuracy and reliability of LLMs are heavily dependent on the quality of the training data.

Misinformation or biased data can lead to incorrect or misleading responses, highlighting the importance of carefully curating and refining the training data. Ensuring that LLMs are trained on high-quality, diverse datasets is crucial for producing accurate and reliable results. By prioritizing the quality of training data, developers can enhance the language understanding capabilities of LLMs, enabling them to generate more precise and contextually appropriate translations.

Challenges and Limitations of LLMs in Language Translation

Despite their impressive capabilities, LLMs still face several challenges and limitations in language translation. One of the primary challenges is the lack of common sense reasoning, which can result in incorrect or nonsensical translations. Additionally, LLMs often struggle with idiomatic expressions, colloquialisms, and cultural references, leading to inaccurate or misleading translations.

The quality of the training data also plays a significant role in the performance of LLMs. Biased or low-quality data can negatively impact the accuracy and reliability of the translations produced by these models. Addressing these challenges requires ongoing efforts to improve the training data and enhance the reasoning capabilities of LLMs.

To tackle these limitations, consider enlisting a seasoned technology partner that provides AI for business operations optimization services. Experienced AI software engineers can help advance AI-powered language translation and improve the overall performance of LLMs.

Evaluation and Benchmarking of LLMs

Evaluating and benchmarking LLMs is essential for assessing their performance and identifying areas for improvement. Several automated evaluation metrics, such as BLEU, ROUGE, and METEOR, are available for this purpose, along with benchmarking datasets to run them on. These metrics compare a model’s output against human reference translations, mostly through word and n-gram overlap, which serves as a proxy for accuracy and fluency but captures the nuances and complexities of language only partially.
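BLEU, for instance, scores a translation by how many of its n-grams also appear in a reference translation. The function below is a deliberately simplified sentence-level sketch: it uses unigrams and bigrams only (the standard metric goes up to 4-grams), whitespace tokenization, a single reference, and no smoothing. For real evaluations, an established library is the safer choice.

```python
import math
from collections import Counter

def ngrams(tokens, n):
    """Count the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def simple_bleu(candidate, reference, max_n=2):
    """Simplified BLEU: clipped n-gram precision with a brevity penalty."""
    cand, ref = candidate.lower().split(), reference.lower().split()
    if not cand:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts, ref_counts = ngrams(cand, n), ngrams(ref, n)
        total = sum(cand_counts.values())
        # Clip each candidate n-gram count by its count in the reference.
        matched = sum(
            min(count, ref_counts[gram]) for gram, count in cand_counts.items()
        )
        if total == 0 or matched == 0:
            return 0.0  # no smoothing: any empty precision zeroes the score
        log_precisions.append(math.log(matched / total))
    # Penalize candidates that are shorter than the reference.
    brevity = 1.0 if len(cand) > len(ref) else math.exp(1 - len(ref) / len(cand))
    # Geometric mean of the n-gram precisions, scaled by the penalty.
    return brevity * math.exp(sum(log_precisions) / max_n)

reference = "the cat sat on the mat"
score = simple_bleu("the cat is on the mat", reference)  # about 0.71
```

A single wrong word lowers the score even though the sentence stays fluent, and a valid paraphrase would score poorly too, which is one reason overlap metrics are usually paired with human review.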

In addition to automated metrics, human evaluation is crucial for assessing the quality and relevance of LLMs’ translations. Human evaluators can provide insights into the context and coherence of the generated text, ensuring that the translations are not only accurate but also contextually appropriate. By combining automated metrics with human evaluation, developers can gain a comprehensive understanding of LLM performance and make informed decisions to enhance their models.

Summing Up

The advancements highlighted above, from neuro-symbolic reasoning to multimodal understanding, are reshaping what LLMs can do, opening the door to advanced LLM applications that were not feasible just a few years ago. By integrating these cutting-edge developments, enterprises can elevate their AI capabilities to address complex linguistic tasks and communication challenges that standard models would mishandle. The key is not just adopting the latest tech for its own sake, but aligning these innovations with your specific business goals: whether it’s improving generative AI content for marketing, automating customer support across languages, or analyzing domain-specific documents with expert accuracy.

8allocate’s AI engineering team has been at the forefront of implementing such next-gen solutions. We help organizations build AI MVPs and custom LLM solutions tailored to their domain – from consulting on strategy to full-cycle development and integration. Our experience in AI consulting and product development can guide you in selecting the right models, fine-tuning them for your industry, and integrating them seamlessly into your existing systems. With the right partner, even highly regulated or specialized fields can leverage LLM technology confidently and cost-effectively.

Ready to explore these enterprise LLM use cases further? Contact us to discover how we can help design and implement a custom LLM strategy that delivers tangible ROI for your business. By staying ahead of the curve with advanced LLM innovations, your enterprise can transform the way it communicates, operates, and competes on the global stage.

Frequently Asked Questions

Quick Guide to Common Questions

What are some promising enterprise use cases for advanced LLMs?

Advanced LLMs can be applied in many high-impact areas of business. Common enterprise LLM use cases include automated customer support chatbots that understand complex queries, content generation for marketing (blogs, product descriptions) that maintains brand voice, and intelligent document processing (such as summarizing legal contracts or financial reports). Other use cases involve translation services for global teams, coding assistants for developers, and decision-support tools that analyze large knowledge bases. The latest LLM advancements make these applications more accurate and reliable for enterprise deployment.

How can we get started with adopting LLM solutions in our organization?

Begin by identifying a use case where generative AI could solve a clear business problem or improve efficiency. Evaluate whether an off-the-shelf model (like GPT-4 via an API) meets your needs or if a custom LLM solution would add more value (for example, using your proprietary data to fine-tune a model). It’s wise to start with a pilot or AI MVP development project focusing on one application to demonstrate value. Engage stakeholders early, and consider partnering with AI consulting experts who can assess feasibility, help curate training data, and guide a proof-of-concept implementation. Starting small and iterating is key – you can expand LLM adoption once initial success is proven.

What are the integration challenges when implementing LLMs into existing systems?

Integrating LLMs into your current tech stack requires careful planning. One challenge is ensuring data flows smoothly between the LLM and your databases or applications – this may involve using APIs or building middleware. You’ll also need to address deployment concerns, such as selecting appropriate cloud infrastructure or on-premise servers to host the model, and optimizing for latency if the LLM will serve real-time requests. Another consideration is security and access control: LLMs should be integrated in a way that sensitive data is protected (through encryption, anonymization, or private instances). It’s important to work with experienced engineers or solution providers to architect the integration so that the LLM can operate within your systems reliably and securely. Planning for maintenance is also crucial – integrate monitoring tools to track the LLM’s performance and set up workflows for updating the model as your data or requirements evolve.
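As one small example of the anonymization step mentioned above, text can be scrubbed of obvious identifiers before it leaves your systems. The sketch below is a rough illustration only: the two regular expressions are simplistic assumptions that catch common e-mail and phone formats, and a production setup would need broader coverage such as names, addresses, and ID numbers.

```python
import re

# Simplistic patterns for illustration; real PII detection needs more.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

def redact(text):
    """Replace e-mail addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)

message = "Contact Ana at ana.lopez@example.com or +34 600 123 456 about the contract."
safe_message = redact(message)
# safe_message == "Contact Ana at [EMAIL] or [PHONE] about the contract."
```

Only `safe_message` would then be passed to the translation model or a third-party API.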

When should we consider a custom LLM versus an off-the-shelf model?

Off-the-shelf LLMs (such as OpenAI’s GPT series or other pre-trained models) are great for general purposes and fast prototyping. However, if your use case involves highly specialized knowledge, industry-specific jargon, or requires outputs in a very specific style/tone, a custom LLM may be beneficial. Custom LLM development means fine-tuning a model (or training one from scratch in rare cases) on data from your domain, so it learns the context that generic models might not capture. You should consider a custom solution when the performance of a generic model plateaus or makes too many errors in your domain, or when data privacy regulations prevent you from using third-party APIs. While custom models require more investment upfront (for data preparation, training, and engineering), they can deliver superior accuracy and compliance, and they give you more control over updates and features. Many enterprises start with a pre-trained model and graduate to a custom LLM as they gather proprietary data and expertise.

How do we measure the ROI of generative AI projects using LLMs?

Measuring ROI for LLM initiatives involves looking at both tangible and intangible benefits. On the cost side, consider the expenses of model usage (API fees or infrastructure costs), development effort, and any new hires or training required. Then evaluate the gains: for example, time saved (are your teams able to handle more queries or produce content faster?), quality improvements (fewer errors in translations or reports), or increased revenue (perhaps through better customer engagement or faster go-to-market with content). Many companies track metrics like reduction in manual work hours, improvements in customer satisfaction scores, or conversion-rate uplift after deploying an LLM-powered feature. It’s also important to account for qualitative ROI: better decision-making insights, enhanced innovation, and competitive advantage gained by using AI. Monitor these projects over a 6-12 month period and compare the results against their goals. If the benefits outweigh the costs (or if strategic value is achieved, like staying ahead of competitors), then the investment is justified. Often, a well-implemented LLM solution will pay for itself by streamlining operations and opening new opportunities that drive business growth.
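The tangible part of that calculation is simple arithmetic: ROI is gains minus costs, divided by costs. The sketch below shows the computation with made-up figures for a hypothetical 12-month pilot; the cost and gain categories and all of the numbers are illustrative assumptions, not benchmarks.

```python
def roi(total_gains, total_costs):
    """Return ROI as a fraction: (gains - costs) / costs."""
    if total_costs <= 0:
        raise ValueError("total_costs must be positive")
    return (total_gains - total_costs) / total_costs

# Made-up figures for a 12-month pilot, for illustration only.
costs = {"api_fees": 24_000, "engineering": 60_000, "training": 6_000}
gains = {"hours_saved_value": 90_000, "extra_revenue": 45_000}

pilot_roi = roi(sum(gains.values()), sum(costs.values()))  # 0.5, i.e. 50%
```

Qualitative benefits do not fit into this formula, so it is best treated as a lower bound that is reviewed alongside the non-financial goals of the project.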