How can enterprises systematically measure the value created by generative AI? Start by learning how to measure AI ROI and how to avoid the common pitfalls that distort returns. Enterprise CTOs and Heads of Product are under pressure to justify the ROI of their generative AI investments. It’s no longer enough to experiment with AI; leadership needs a clear-eyed strategy to assess impact in concrete terms. This article provides a strategic, research-backed framework to evaluate generative AI’s true value, blending technical insights with business outcomes.

Why Measuring Generative AI’s Value Matters

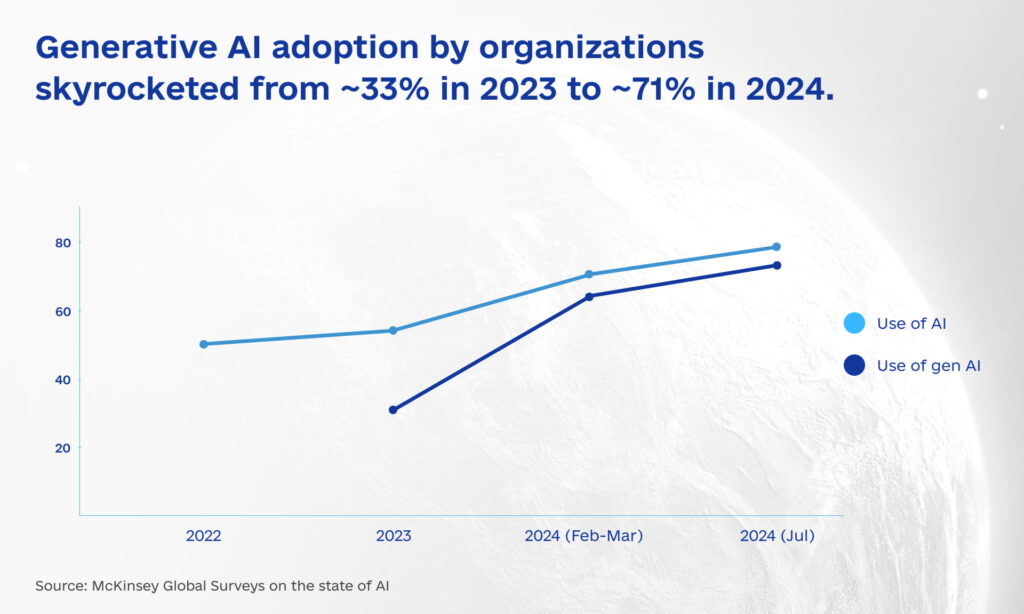

Massive adoption of generative AI is underway. The share of companies using generative AI jumped from roughly one-third in 2023 to nearly three-quarters in 2024. Figure 1 illustrates this surge, reflecting how quickly organizations are embracing GenAI tools across functions. This rapid uptake is driven by high hopes, from automating workflows to unlocking new revenue streams, but it also raises an urgent question: are these AI initiatives genuinely delivering measurable business value, or just riding a trend?

Source: McKinsey

Enterprise leaders must justify significant AI budgets with tangible results. Global generative AI spending is forecast to reach $644 billion in 2025, a 76% increase from 2024. Yet many AI projects struggle to move past pilot stages into fully scaled solutions – creating a “PoC graveyard” where proofs-of-concept fail to translate into business impact. This makes measuring success essential. Decision-makers need robust metrics to distinguish meaningful ROI from mere experimentation. As one survey revealed, fewer than 20% of companies set well-defined KPIs for generative AI projects – a gap that can leave value on the table. In short, you can’t manage what you don’t measure. Establishing how, where, and when generative AI creates value is vital to turning hype into lasting outcomes.

From Hype to ROI: Rethinking Value for Generative AI

Traditional return on investment calculations often fall short for AI initiatives. Unlike a straightforward software deployment, generative AI brings unique characteristics – it learns and improves, produces unbounded outputs, and transforms work processes in subtle ways. Early value from GenAI tends to come from efficiency and productivity gains, rather than immediate revenue. For example, large language model tools can dramatically speed up research, coding, or content creation tasks. Andrew Lo of MIT observes that generative AI “will make existing employees more efficient… an increase in productivity”. A financial analyst assisted by an AI might cover 5–10× more data in the same time, or a developer might code faster with AI pair programming. These time savings improve output quality and speed – creating real value even if they don’t directly show up as new revenue in the short term.

However, focusing only on conventional cost vs. revenue ROI can be misleading in GenAI’s early stages. In fact, initial ROI for generative AI projects may appear neutral or even negative, once you account for upfront investments in infrastructure, talent, and training. Forward-looking leaders understand that the payoff often comes in phases. In the short term, costs may outweigh monetary returns; in the medium term, efficiency gains and enhanced capabilities start yielding cost savings; in the long term, generative AI can enable new products and business models that drive revenue growth. In other words, patience and strategic vision are required to realize AI’s full value. Many organizations are willing to accept a delayed financial ROI because they anticipate transformative benefits down the line – from faster R&D cycles to personalized customer experiences that strengthen loyalty.

Rather than asking “Where are the profits today?” smart leaders ask “What productivity and capability improvements is AI delivering, and how will those translate to competitive advantage over time?” By reframing “value” to include time saved, quality improved, and opportunities unlocked, enterprises get a more accurate picture of generative AI’s real impact.

Key Areas Where Generative AI Delivers Business Value

Generative AI’s value is ultimately realized in real-world use cases. Leading organizations are identifying processes and functions where GenAI can move the needle on performance. Here are some domains where enterprises are already seeing measurable benefits:

- Software Development and IT: One of the strongest immediate ROI drivers has been in the software development lifecycle. AI-powered coding assistants (e.g. GitHub Copilot) help engineers generate and review code faster, reducing development time. According to Deloitte’s analysis, IT teams are reporting significant efficiency gains across the entire process – from requirements gathering to testing and deployment. By accelerating release cycles and catching bugs earlier, AI in development can lower costs and speed up time-to-market – a clear value add.

- Customer Service and Support: GenAI is enhancing customer engagement through advanced chatbots and AI agents that handle routine inquiries. Enterprises are automating call center interactions and support tickets, improving response times and freeing human agents for complex issues. Automating FAQs and initial support with AI cuts costs and often improves customer satisfaction via faster service.

- Knowledge Work and Content Generation: Across marketing, finance, law, and education, generative AI tools are automating content creation and data analysis. For example, marketing teams use GenAI to draft personalized copy, analyze customer data, or even generate designs – increasing output while maintaining quality. These productivity gains allow employees to handle a larger volume of work or focus on higher-value tasks.

- Decision Support and Data Analysis: Generative AI models excel at sifting through vast datasets, uncovering patterns, and generating insights in natural language. This helps executives and managers make data-driven decisions more quickly. For instance, an AI system might analyze sales data and “narrate” key trends or anomalies, giving leaders actionable intelligence without waiting on lengthy manual analysis. By augmenting human decision-making with rapid AI-driven insights, companies can react to market changes faster – a competitive advantage that, while hard to put a dollar value on immediately, clearly contributes to business agility.

Importantly, these value areas often yield indirect or “soft” ROI initially – faster cycle times, better decisions, improved customer sentiment – which eventually translate into “hard” financial outcomes like cost savings and revenue growth. The key is to track those intermediate metrics closely (e.g. reduction in support backlog, faster development sprints, higher customer retention) and connect them to financial results over time. We’ll discuss metrics shortly, but it’s evident that generative AI’s impact is multi-dimensional. Organizations that recognize and measure these various dimensions of value will have the clearest view of ROI.

A Framework for Measuring Generative AI Impact

How can enterprises systematically measure the value created by generative AI? To answer this, it helps to use a structured framework that goes beyond basic dollar ROI. One useful approach, inspired by AI strategists and enterprise frameworks, is to evaluate AI’s impact across five key business pillars: Innovation, Customer Experience, Operational Efficiency, Risk & Responsibility, and Financial Performance. Let’s break these down:

1. Innovation and New Opportunities

Generative AI can be a powerful catalyst for innovation. This pillar looks at how AI opens new products, services, or market opportunities.

Metrics: number of AI-driven pilot projects, speed of moving from idea to prototype, new revenue streams generated from AI-enabled offerings.

For example, if AI allows you to launch a new personalized product line, the framework captures that innovation value (even before it fully reflects in profit). Leading indicator: count of GenAI experiments or prototypes in development. Lagging indicator: revenue or market share from AI-enabled products.

2. Customer Value and Growth

This pillar measures AI’s impact on customer experience and growth. Are your GenAI applications improving customer satisfaction, engagement, or retention?

Metrics: customer satisfaction (CSAT) or Net Promoter Score changes after AI deployment, increase in customer lifetime value, conversion rates for AI-personalized campaigns.

For example, an AI chatbot’s effect might be seen in faster response times and positive feedback (leading), and over time in higher retention or sales from happier customers (lagging). Generative AI’s ability to personalize content at scale often shines here, yielding growth in customer loyalty that translates to revenue.

3. Operational Efficiency and Productivity

Likely the most immediate source of ROI, this pillar tracks how AI improves internal processes and reduces costs. See AI efficiency for enterprises for productivity-first measurement angles.

Metrics: time saved on key processes, reduction in error rates, output per employee (efficiency per FTE), and direct cost savings from automation. These are the core outcomes targeted by AI for business operations optimization.

For instance, if an AI document processor automates 70% of a previously manual data entry task, calculate the hours saved and labor cost reduced.
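The calculation in that example can be sketched in a few lines of Python. Only the 70% automation share comes from the example above; the monthly hours, the hourly rate, and the function name `automation_savings` are hypothetical placeholders to be replaced with your own baseline data.

```python
def automation_savings(manual_hours_per_month: float,
                       automated_share: float,
                       hourly_labor_cost: float) -> dict:
    """Estimate monthly hours and labor cost freed up by partial automation."""
    if not 0.0 <= automated_share <= 1.0:
        raise ValueError("automated_share must be between 0 and 1")
    hours_saved = manual_hours_per_month * automated_share
    return {
        "hours_saved": hours_saved,
        "labor_cost_saved": hours_saved * hourly_labor_cost,
    }


# Assumed inputs (not from any real deployment): 400 manual hours per month,
# 70% of the task automated, $30 fully loaded labor cost per hour.
savings = automation_savings(400, 0.70, 30.0)
print(f"Hours saved per month: {savings['hours_saved']:.0f}")
print(f"Labor cost saved per month: ${savings['labor_cost_saved']:,.0f}")
```

This yields a gross figure. For a net figure, subtract the AI tool's running costs and any time people spend reviewing its output.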

Generative AI’s initial ROI often lives here – in the dozens of small optimizations that together boost productivity across the organization. Track these diligently: e.g. “employees can handle 50% more support tickets per day” or “development teams completed 3 extra projects this quarter thanks to AI assistance.”

4. Responsible Transformation (Risk & Compliance)

AI value must be weighed against risk and implemented responsibly. This pillar assesses how AI adoption is managed in terms of compliance, ethics, and change management. It might not sound like a value metric, but mitigating risks and ensuring smooth cultural adoption protects value.

Metrics: regulatory compliance achieved (yes/no for standards), reduction in error/hallucination incidents, employee training and upskilling rates, user trust scores.

If generative AI is used in a regulated industry (finance, healthcare), its “value” is tightly tied to not creating new risks. Successful AI programs often have high marks in change management: e.g. percentage of workforce trained on AI tools, or explicit processes to handle AI errors. Companies that proactively invest in training and culture change see higher ROI because employees actually use the tools effectively rather than resist them. This pillar might use qualitative checkpoints (e.g. “AI ethics committee in place, no compliance breaches since AI launch”) as part of measuring long-term value preservation.

5. Financial Performance

Finally, the traditional financial outcomes – but viewed in context. This pillar looks at profit and cost impact attributable to AI.

Metrics: revenue growth tied to AI initiatives, profit margin improvement, ROI percentage on AI projects after a given period, and payback period.

Notably, this comes last because it often materializes last. For example, a company might measure that after 18 months, a generative AI system for supply chain optimization led to a 5% reduction in operating costs (a clear financial win). Or a new AI-driven product line generated $X in new revenue. These are the ultimate lagging indicators confirming that all the innovation, efficiency, and improved customer experience are translating into dollars.
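As a rough illustration of the ROI percentage and payback period metrics listed above, here is a minimal Python sketch. It uses the standard ROI formula and a simple (undiscounted) payback calculation; the function names and all dollar figures are hypothetical, and a finance team may prefer a discounted variant.

```python
from typing import Optional


def roi_percent(total_benefit: float, total_cost: float) -> float:
    """Return ROI as a percentage: (benefit - cost) / cost * 100."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost * 100


def payback_period_months(upfront_cost: float,
                          monthly_net_benefit: float) -> Optional[float]:
    """Simple payback: months until cumulative net benefit covers the upfront cost.

    Returns None when the monthly net benefit is not positive, because the
    investment is then never recovered.
    """
    if monthly_net_benefit <= 0:
        return None
    return upfront_cost / monthly_net_benefit


# Hypothetical figures for an 18-month review window.
# Run costs are already netted out of the monthly benefit, so only the
# upfront cost is treated as the investment here (a modeling assumption).
upfront_cost = 500_000          # build, integration, and training
monthly_net_benefit = 40_000    # savings plus new revenue, minus run costs
months = 18

total_benefit = monthly_net_benefit * months
print(f"ROI after {months} months: {roi_percent(total_benefit, upfront_cost):.1f}%")
print(f"Payback period: {payback_period_months(upfront_cost, monthly_net_benefit):.1f} months")
```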

By tracking interim benefits in the other pillars, leadership can connect the dots to financial gains instead of waiting passively for ROI to show up. This proactive measurement builds confidence among executives and boards that generative AI is on the right track, even if direct profit takes time.

Using a multi-pillar value framework ensures that value isn’t viewed narrowly. Generative AI’s impact can be diffuse – a little boost here, a new capability there – but when aggregated across the business, it can drive significant competitive advantage. By defining metrics in each category (including both leading indicators of future value and lagging indicators of realized value), enterprises create a comprehensive scorecard for their AI programs. This also aids in communication: CTOs and Heads of Product can clearly articulate to CEOs or investors where AI is making a difference (be it faster innovation cycles, happier customers, or leaner operations). In sum, measuring generative AI’s real value means measuring what matters to the business as a whole – not just the IT department’s budget.
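One way to make such a scorecard concrete is a small data structure that records, for each pillar, a metric's baseline, its current value, and whether it is a leading or lagging indicator. The sketch below is one possible shape, not a prescribed implementation; the class name `Metric`, the function `scorecard`, and every number in it are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Metric:
    pillar: str
    name: str
    kind: str              # "leading" or "lagging"
    baseline: float        # value before the AI rollout
    current: float         # latest measured value
    higher_is_better: bool = True

    def improvement_pct(self) -> float:
        """Percent change versus baseline, signed so that positive means better."""
        if self.baseline == 0:
            raise ValueError(f"{self.name}: baseline must be non-zero")
        change = (self.current - self.baseline) / abs(self.baseline) * 100
        return change if self.higher_is_better else -change


def scorecard(metrics: List[Metric]) -> Dict[str, List[str]]:
    """Group one formatted line per metric under its pillar."""
    report: Dict[str, List[str]] = {}
    for m in metrics:
        line = f"[{m.kind}] {m.name}: {m.improvement_pct():+.1f}%"
        report.setdefault(m.pillar, []).append(line)
    return report


# All values below are made-up placeholders, one metric per pillar.
metrics = [
    Metric("Innovation", "GenAI prototypes in development", "leading", 2, 5),
    Metric("Customer Value", "First response time (min)", "leading", 30, 12,
           higher_is_better=False),
    Metric("Operational Efficiency", "Support tickets per agent per day",
           "leading", 20, 30),
    Metric("Responsible Transformation", "Workforce trained on AI tools (%)",
           "leading", 40, 70),
    Metric("Financial Performance", "Operating cost index", "lagging", 100, 95,
           higher_is_better=False),
]

for pillar, lines in scorecard(metrics).items():
    print(pillar)
    for line in lines:
        print("  " + line)
```

Recording a baseline for every metric before rollout is what makes the later comparison meaningful, and the `higher_is_better` flag keeps "lower is better" metrics such as response time or cost from showing up as regressions.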

Best Practices to Maximize ROI from Generative AI

Measuring value is one side of the coin; realizing that value is the other. Through our research and industry experience, several best practices emerge for enterprises aiming to maximize generative AI’s ROI:

Start with Targeted Use Cases and Small Wins

Rather than a vague “AI transformation,” identify specific, high-impact use cases where generative AI can solve a known pain point. Launch sandbox experiments or pilot projects in these areas. For example, pilot an AI content generator for the marketing team’s newsletter creation, or a GenAI assistant to help the legal team summarize contracts. Keep the initial scope focused and manageable.

Small pilots with clear goals encourage creative problem-solving and minimize risk. Crucially, quick wins build momentum – they produce evidence of value (e.g. “the pilot saved 300 hours of manual work in 2 months”) that can justify scaling up.

This aligns with 8allocate’s approach to AI MVP development: building a minimum viable AI solution to prove value before heavy investment, a service that helps organizations secure those early wins.

Align AI Projects to Business Strategy from Day 1

Generative AI initiatives should not exist in a vacuum or solely in the R&D lab. Tie each AI use case to a strategic business objective – whether it’s improving customer retention, speeding up product launches, or reducing compliance costs. This ensures the metrics you track resonate with leadership.

For example, if your company strategy is to penetrate a new market segment, focus your GenAI efforts on that (maybe an AI-driven personalization to attract those customers). By aligning with business goals, you frame AI value in terms executives care about, making it easier to secure support and measure success in business terms.

Engaging AI consulting services can help at this stage; experts can facilitate strategy workshops to connect AI capabilities with high-value business opportunities (8allocate’s AI consulting team often performs an “AI readiness & value discovery” assessment to prioritize use cases aligned to ROI).

Invest in Change Management and Training

One often underestimated factor in AI ROI is human adoption. Introducing generative AI into workflows fundamentally changes how employees do their jobs. Without proper change management, even a technically successful AI deployment can falter – employees may feel anxious about AI or unsure how to leverage the extra time AI frees up. Prepare the workforce through transparent communication of the AI’s purpose, and provide training to build AI fluency.

For example, if an AI report generator saves analysts 4 hours a week, decide in advance how they can use that time (e.g. focusing on deeper analysis or client interaction). This prevents efficiency gains from turning into wasted idle time. Additionally, involve end-users in the AI project development – their feedback will improve the tool’s usability and their buy-in. Companies that take a “people-first approach” – upskilling staff and addressing cultural barriers – see much smoother AI adoption and faster realization of value.

Account for AI’s Limitations and Manage Risks

Generative AI is powerful but imperfect. Models can hallucinate incorrect information, have bias issues, or struggle with domain-specific data (like precise calculations in finance). A single AI error in a critical process can wipe out trust and value quickly. So, implement guardrails: use human review for AI outputs in high-stakes use cases, put in place quality checks, and start with lower-risk applications before scaling to sensitive ones.

For instance, in a finance department you might use GenAI to draft analysis but require human analysts to verify any numbers. Measure and report on these interventions too – e.g. “95% of AI-generated customer emails required no human correction” indicates the solution is working well, whereas frequent corrections signal a need to retrain the model or narrow its scope. Also, collaborate with compliance and security teams early to tackle regulatory concerns (data privacy, model transparency, etc.). Addressing these limitations head-on prevents costly setbacks and builds stakeholder confidence in the AI. In short, responsible AI practices are not just ethical – they directly impact ROI by avoiding value-eroding mistakes.
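The "no human correction" rate mentioned above is straightforward to compute from a review log. The following Python sketch assumes each reviewed output is recorded as a boolean flag; the log contents, the function name `no_correction_rate`, and the use of 95% as a pass threshold are assumptions made for illustration.

```python
from typing import Sequence


def no_correction_rate(needed_correction: Sequence[bool]) -> float:
    """Share of reviewed AI outputs that a human accepted without edits."""
    if not needed_correction:
        raise ValueError("no reviewed outputs to measure")
    accepted = sum(1 for flag in needed_correction if not flag)
    return accepted / len(needed_correction)


# Hypothetical review log: True means the reviewer had to correct the output.
review_log = [False] * 192 + [True] * 8

TARGET_RATE = 0.95  # assumed threshold, borrowed from the 95% example above

rate = no_correction_rate(review_log)
status = "on track" if rate >= TARGET_RATE else "retrain the model or narrow its scope"
print(f"No-correction rate: {rate:.1%} ({status})")
```

Tracking this rate per use case, rather than as a single company-wide number, makes it easier to see which applications are ready to scale and which still need human review on every output.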

Iterate and Scale What Works

ROI measurement should be an ongoing process, not a one-time calculation. Continuously monitor the defined KPIs and be ready to iterate. If a particular generative AI application shows strong results in one department, replicate it in others where applicable. Conversely, if the metrics show underperformance, investigate why – maybe the model needs more training data or users need more training. Many enterprises adopt an agile approach to AI: deploy, measure, learn, adjust, and then scale up successful pilots to enterprise-wide solutions. This way, investment flows to the highest-value areas.

For example, if an AI writing assistant is a hit with the sales team (improving proposal turnaround time and win rates), consider extending it to marketing and customer success teams with necessary customizations. Scaling a proven solution often improves ROI disproportionately: the upfront R&D cost has already been paid, so each new deployment adds value at a lower marginal cost.

By following these best practices, organizations create a virtuous cycle: clear alignment and small wins build confidence, good change management drives adoption, risk controls prevent setbacks, and continuous iteration amplifies value. It’s a recipe to maximize the ROI of generative AI in practice, not just in theory.

Conclusion: Turning Generative AI Hype into Measurable Impact

Generative AI represents a transformational leap in capability – but capturing its real value requires discipline, strategy, and measurement. Enterprise leaders must cut through the noise and focus on outcomes that align with business strategy. By identifying high-impact use cases, defining the right metrics (from efficiency gains to innovation milestones), and fostering adoption through strong change management, organizations can ensure their AI investments yield tangible returns.

Don’t embark on this journey alone if you lack certain expertise. Bringing in external AI consulting or partnering with experienced AI development services can accelerate value realization.

We can help you avoid common pitfalls, identify the highest-ROI opportunities, and build AI solutions that deliver measurable impact. The generative AI revolution is here to stay; tracking generative AI trends helps leaders anticipate how value drivers will evolve and where ROI will concentrate next.

Contact us to discuss how we can turn your AI ambitions into tangible results. Let’s build AI solutions that make business sense and deliver value you can quantify.

FAQ: Measuring Generative AI’s ROI and Business Impact

Quick Guide to Common Questions

How do you measure ROI for a generative AI project?

Start by identifying the specific key performance indicators (KPIs) that align with the project’s goals. These often include efficiency metrics (e.g. time or cost saved by AI automation), output metrics (e.g. more units produced or customers served), quality metrics (e.g. accuracy or satisfaction improvements), and financial metrics (revenue gained or costs reduced). Establish a baseline before AI implementation, then track changes once the generative AI is deployed. For example, if implementing an AI content generator, measure how much faster content is produced and whether engagement metrics improve, then translate those improvements into cost savings or revenue impact. It’s important to measure both short-term proxies of value (like hours saved) and longer-term outcomes (like sales growth), since generative AI ROI may accrue over time.

What are the key metrics for success with generative AI?

Key metrics will vary by use case, but generally: (1) Productivity and Efficiency: time saved, increase in output per employee, reduction in error rates. (2) Financial Impact: cost savings, revenue increases attributable to the AI (for instance, revenue from new AI-driven products or higher sales conversion rates after personalization). (3) Quality and Performance: accuracy of AI outputs (perhaps measured by error counts or human evaluation scores), improvement in quality metrics like customer satisfaction or retention. (4) Adoption and Utilization: percentage of target users actively using the AI tool, usage frequency, and user feedback. (5) Innovation and Speed: number of new ideas/prototypes enabled by AI, faster cycle times (e.g. reduced time to market for a feature due to AI assistance).

How long does it take to see ROI from generative AI initiatives?

It often happens in phases. In the short term (0–6 months), you might see process improvements and time savings – for example, an AI tool immediately automates a manual task, freeing 20 hours per week. However, these early gains might be offset by upfront costs and the learning curve, so pure financial ROI could be neutral. In the medium term (6–18 months), as the AI system scales and users get more proficient, organizations start realizing efficiency gains translating to cost reduction and maybe incremental revenue (e.g. deploying an AI feature to customers that brings in new sales). Many companies report reaching a positive ROI within the first year as these benefits accumulate. In the long term (18+ months), generative AI can enable more transformative outcomes – like new business models or significant market expansion – which drive substantial revenue growth or cost leadership. At this stage, ROI can become very high as the initial costs have been absorbed and the AI continues to deliver value. Patience is warranted as long as leading indicators (productivity, adoption, quality) are positive, signaling that financial returns will follow.
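To see how these phases play out in numbers, the sketch below tracks cumulative net value month by month and finds the break-even month. It is a simplified model under stated assumptions: the cost and benefit figures are invented, benefits step up once after month 6, and the helper names `cumulative_net_value` and `break_even_month` are hypothetical.

```python
from typing import List, Optional, Sequence


def cumulative_net_value(upfront_cost: int,
                         monthly_run_cost: int,
                         monthly_benefits: Sequence[int]) -> List[int]:
    """Running total of benefits minus costs at the end of each month."""
    total = -upfront_cost
    series = []
    for benefit in monthly_benefits:
        total += benefit - monthly_run_cost
        series.append(total)
    return series


def break_even_month(series: Sequence[int]) -> Optional[int]:
    """First month (1-indexed) where cumulative net value is non-negative."""
    for month, value in enumerate(series, start=1):
        if value >= 0:
            return month
    return None


# Hypothetical ramp: modest benefits for 6 months, larger ones for months 7-18.
benefits = [10_000] * 6 + [25_000] * 12
series = cumulative_net_value(upfront_cost=120_000, monthly_run_cost=5_000,
                              monthly_benefits=benefits)

print("After month 6:", series[5])
print("Break-even month:", break_even_month(series))
print("After month 18:", series[-1])
```

With these placeholder inputs the project is still in the red after the short-term phase and crosses break-even during the medium-term phase, which is the pattern described above. Real projects will have lumpier costs and benefits, so treat the shape of the curve, not the specific months, as the takeaway.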

What if my generative AI project isn’t showing clear ROI?

First, diagnose the issue: Is it a measurement problem or an actual performance problem? It could be that the AI is delivering value, but you haven’t defined the right metrics to capture it. Re-examine if you’re tracking the full range of benefits – sometimes the ROI is indirect (e.g. improved customer experience leading to loyalty) and requires a longer-term view or proxy metrics. If you determine the project truly isn’t delivering, analyze why. Common reasons include: low adoption (employees or customers not using the AI as expected, so benefits aren’t realized), poor integration (the AI output isn’t actually improving the workflow due to integration gaps), technical issues or limitations (e.g. the model’s accuracy is too low, causing more work), or misaligned use case (the AI is solving a problem of low business value). Address these accordingly – through better change management and training to boost adoption, improving the technical solution, or refocusing the AI on a more valuable problem. It’s also crucial to engage stakeholders and set realistic expectations. Sometimes ROI isn’t realized because the organization hasn’t adapted its processes to leverage the AI.

How can we ensure our generative AI initiative aligns with business goals?

The alignment starts at project inception. Tie each AI project to a specific business objective or KPI that leadership already cares about. For instance, if your company’s goal is to improve customer retention by 10%, frame and design your generative AI initiative (say, an AI-driven personalized recommendation system) around contributing to that goal. This means involving business stakeholders in defining the AI use case requirements and success criteria. Use frameworks like OKRs (Objectives and Key Results) where the “O” is a business goal and the “KR” is how the AI will help achieve it (e.g. “Implement generative AI chatbot to reduce customer churn by providing 24/7 support, aiming for +5 points in customer satisfaction”). Throughout development and deployment, maintain a cross-functional team – include product owners or business managers along with data scientists. They will ensure the AI’s output is actionable and relevant to business processes. Regularly review the AI initiative in leadership meetings just as you would any strategic project.