Generative AI has taken the world by storm and is here to stay for the long term.

Goldman Sachs Research expects AI investment to approach $100 billion in the US and $200 billion globally by 2025. Over the long term, AI-related investments could further increase to 1.5 to 2.5% of GDP in developed countries.

Most businesses are now convinced that Gen AI will transform their operations, but far fewer know exactly how that will happen. Market dynamics shift fast as technology advances and new regulations emerge. Still, our team is fairly confident that several generative AI trends will remain prominent for the next two to three years.

6 Generative AI Trends to Capitalize On

Source: Sopra Steria Next

The generative AI market is at a liminal moment: first movers like OpenAI, Microsoft, and Anthropic are capitalizing on the growth momentum, but larger opportunities are yet to come.

Enterprises are expected to spend $40 billion on Gen AI in 2024 and over $150 billion by 2027, according to IDC. Top spenders are financial services companies (25%-30%), healthcare (15%-20%), consumer goods and retail (15%-20%), and media and entertainment (10%-15%).
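To gauge how steep that forecast is, the implied compound annual growth rate (CAGR) can be worked out from the two IDC figures. A minimal sketch, assuming exactly $40 billion in 2024 and $150 billion in 2027 (IDC says "over" $150 billion, so the result is a floor):

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1


# IDC figures quoted above, in billions of US dollars; 2024 -> 2027 is three years
growth = cagr(40, 150, 3)
print(f"Implied CAGR: {growth:.1%}")  # Implied CAGR: 55.4%
```

In other words, enterprise Gen AI spending would need to grow by roughly 55% a year to hit that target.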

In terms of use cases, businesses are most likely to deploy Gen AI for marketing, sales, product development, and IT functions. McKinsey reports that adoption rates have more than doubled in a year for marketing and sales, and 50% of enterprises have adopted AI in two or more business functions, up from less than a third in 2023.

What we see today is the emergence of a new market driven by Gen AI infrastructure providers, application developers, and service companies, and we expect the following six trends to shape it over the next two to three years.

The Rise of Fine-Tuned LLMs

Large language models (LLMs) like GPT, Llama, and BERT offer new opportunities for streamlining knowledge management tasks. But most users will tell you that these general-purpose tools are good at high-level tasks and lack specialized knowledge.

Coding copilots can reduce time spent on routine tasks like code documentation or simple code generation by 35% to 50%. However, time savings are minimal for high-complexity tasks or for work involving technologies the developer doesn't know well. Likewise, benchmarks of open-source LLMs on code refactoring tasks showed that the models failed to improve the code 30% of the time. In some cases, the tools also changed the external behavior of the code in subtle but critical ways.
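One practical safeguard against such silent behavior changes is differential testing: run the original function and the AI-refactored version on the same inputs and flag every divergence before merging. A minimal sketch, in which both functions are hypothetical stand-ins; the "refactored" version looks equivalent but truncates instead of rounding to the nearest cent:

```python
import random


def apply_discount_original(price_cents: int, percent: int) -> int:
    """Original: discounted price, rounded half-up to the nearest cent."""
    return max(0, (price_cents * (100 - percent) + 50) // 100)


def apply_discount_refactored(price_cents: int, percent: int) -> int:
    """Hypothetical AI refactor: looks cleaner, but silently truncates."""
    return max(0, price_cents * (100 - percent) // 100)


def find_divergences(original, candidate, inputs):
    """Return (args, expected, actual) for every input where the two disagree."""
    mismatches = []
    for args in inputs:
        expected, actual = original(*args), candidate(*args)
        if expected != actual:
            mismatches.append((args, expected, actual))
    return mismatches


rng = random.Random(42)  # fixed seed so the generated inputs are reproducible
test_inputs = [(rng.randint(0, 100_000), rng.randint(0, 100)) for _ in range(1_000)]
test_inputs += [(0, 0), (199, 50), (100, 100)]  # hand-picked edge cases

divergences = find_divergences(apply_discount_original, apply_discount_refactored, test_inputs)
print(f"{len(divergences)} of {len(test_inputs)} inputs changed behavior")
```

Random inputs catch broad regressions, while hand-picked edge cases (here, a half-cent result) target the subtle ones that benchmarks tend to miss.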

Moreover, the use of public Gen AI tools has raised valid security concerns. Sensitive data entered into these tools can be stored indefinitely or used to train other models, violating privacy laws. Commercial Gen AI tools offer guardrails around training on customer data but remain vulnerable to direct adversarial attacks. Attackers exploit techniques like prompt injection, model extraction, and data poisoning to tamper with these solutions.

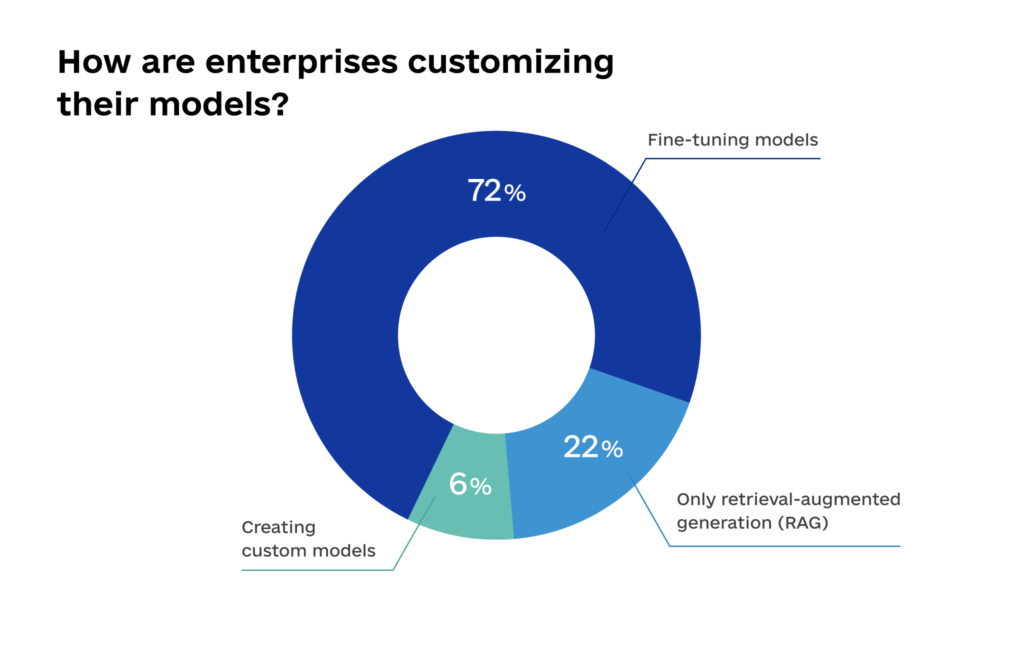

To address these shortcomings, enterprises are choosing to fine-tune open-source models and run them on private cloud instances.

Source: Andreessen Horowitz

Fine-tuning adapts a pre-trained model to a specific task or domain through partial training. Model weights are updated based on new datasets, leveraging pre-training knowledge to improve performance.
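The core idea (start from pre-trained weights, then nudge them with a small domain dataset) can be shown at toy scale. A minimal sketch, assuming a one-feature linear model in place of an LLM and hypothetical data; real fine-tuning updates far more weights with frameworks such as PyTorch, but the gradient-descent update rule is the same in spirit:

```python
def mse(w: float, b: float, data: list[tuple[float, float]]) -> float:
    """Mean squared error of the linear model y = w * x + b on `data`."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def fine_tune(w: float, b: float, data: list[tuple[float, float]],
              lr: float = 0.01, epochs: int = 2000) -> tuple[float, float]:
    """Plain gradient descent on MSE, starting from the pre-trained weights."""
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical "pre-trained" weights, learned on general data
pretrained_w, pretrained_b = 2.0, 0.0
# Hypothetical domain dataset whose true relation is y = 2.5x + 1
domain_data = [(x, 2.5 * x + 1) for x in [0.0, 1.0, 2.0, 3.0, 4.0]]

tuned_w, tuned_b = fine_tune(pretrained_w, pretrained_b, domain_data)
print(f"loss before: {mse(pretrained_w, pretrained_b, domain_data):.3f}")  # loss before: 4.500
print(f"loss after:  {mse(tuned_w, tuned_b, domain_data):.3f}")            # loss after:  0.000
```

The pre-trained weights are already close to the target, so only a modest update is needed; that head start is what makes fine-tuning cheaper than training from scratch.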

For instance, the Low-Rank Adaptation (LoRA) technique freezes the pre-trained weights and inserts small trainable low-rank matrices into specific model layers, so the model can be tuned on a new dataset while training only a tiny fraction of its parameters and without significant computational resources. This selective training enables quick model adaptation and performance gains.
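In the standard LoRA formulation, a frozen weight matrix W of size d × k is augmented with a trainable update B·A, where B is d × r, A is r × k, and the rank r is much smaller than d and k; the adapted layer computes h = W·x + (α/r)·B·A·x. A minimal pure-Python sketch of that forward pass and of the parameter savings, where the matrix sizes, α, and initial values are illustrative assumptions:

```python
import random


def matvec(matrix: list[list[float]], vector: list[float]) -> list[float]:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, alpha: float, r: int) -> list[float]:
    """h = W x + (alpha / r) * B (A x); W stays frozen, only A and B are trained."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))  # A projects down to rank r, B projects back up
    scale = alpha / r
    return [h + scale * u for h, u in zip(base, update)]


def trainable_params(d: int, k: int, r: int) -> tuple[int, int]:
    """Parameters updated by full fine-tuning vs. by a rank-r LoRA adapter."""
    return d * k, r * (d + k)


d, k, r, alpha = 4, 3, 2, 4.0
rng = random.Random(0)
W = [[rng.uniform(-1, 1) for _ in range(k)] for _ in range(d)]  # frozen pre-trained weights
A = [[rng.gauss(0, 0.01) for _ in range(k)] for _ in range(r)]  # small random init
B = [[0.0] * r for _ in range(d)]                               # zero init: adapter starts as a no-op
x = [1.0, 0.5, -2.0]

# With B = 0, the adapted layer reproduces the frozen layer exactly
assert lora_forward(W, A, B, x, alpha, r) == matvec(W, x)

full, lora = trainable_params(4096, 4096, 8)
print(f"full: {full:,}  LoRA (r=8): {lora:,}  ratio: {lora / full:.2%}")
# full: 16,777,216  LoRA (r=8): 65,536  ratio: 0.39%
```

Initializing B to zero means training starts from exactly the pre-trained behavior, and for a 4096 × 4096 layer a rank-8 adapter trains well under 1% of the weights.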

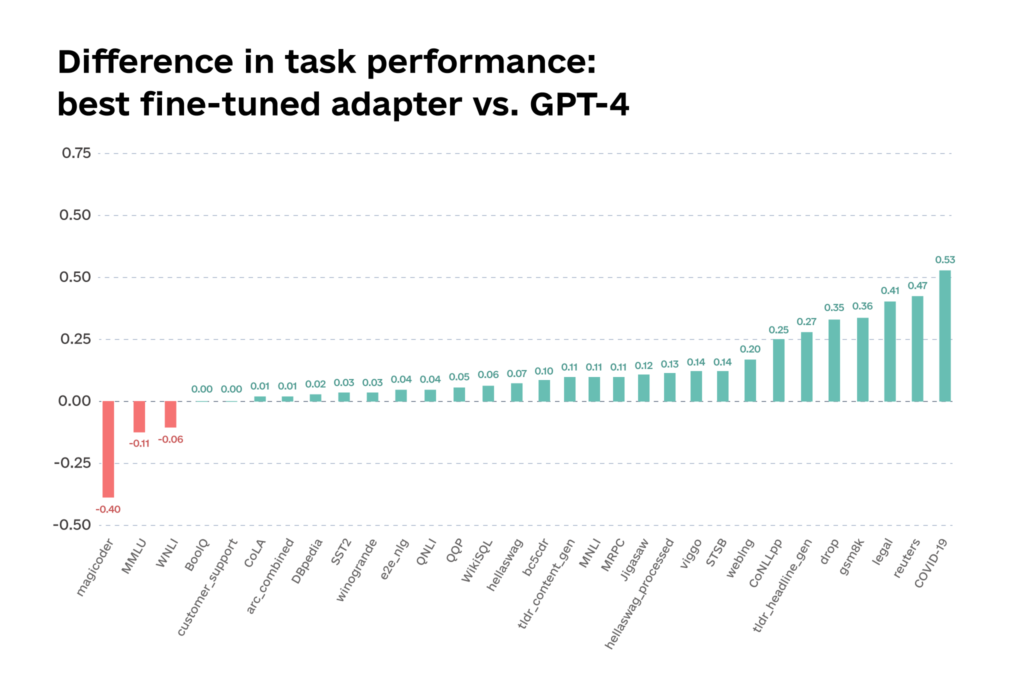

According to the Predibase Fine-Tuning Index, fine-tuned models outperform general-purpose LLMs in tasks like legal contract review, medical text classification, or code generation using a company’s internal codebase.

Source: Predibase

Still, fine-tuning isn’t without its challenges. Adopters must also establish a process for model development, testing, and deployment (MLOps) and optimize their IT infrastructure to host a fine-tuned LLM.

The new model has to be integrated with other business systems to ensure access to domain-specific data. This is rarely straightforward: roughly 90% of fine-tuning effort goes into data management.
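Much of that data work is unglamorous cleanup: dropping incomplete records, removing duplicates, and converting what remains into the prompt/response format a fine-tuning job expects. A minimal sketch, assuming hypothetical support-ticket records with `question` and `answer` fields and a JSON Lines output with `prompt`/`completion` keys (the exact schema depends on the training framework or vendor API in use):

```python
import json


def prepare_examples(records: list[dict]) -> list[dict]:
    """Clean raw records into prompt/completion pairs for fine-tuning."""
    seen = set()
    examples = []
    for record in records:
        question = (record.get("question") or "").strip()
        answer = (record.get("answer") or "").strip()
        if not question or not answer:
            continue  # incomplete record: nothing to learn from
        key = " ".join(question.lower().split())  # normalize case and whitespace for dedup
        if key in seen:
            continue  # duplicate question: keep only the first answer
        seen.add(key)
        examples.append({"prompt": question, "completion": answer})
    return examples


def to_jsonl(examples: list[dict]) -> str:
    """Serialize one JSON object per line (JSON Lines)."""
    return "\n".join(json.dumps(example, ensure_ascii=False) for example in examples)


raw = [
    {"question": "How do I reset my password?", "answer": "Use the 'Forgot password' link."},
    {"question": "how do I reset  my password?", "answer": "Duplicate phrasing."},
    {"question": "What is the refund window?", "answer": ""},
    {"question": "Which plans include SSO?", "answer": "Enterprise plans."},
]
print(to_jsonl(prepare_examples(raw)))
```

Real pipelines add steps such as PII scrubbing and quality scoring, but even this basic pass shows why data preparation dominates the effort.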

Gen AI Applications Will Focus on Differentiation

So far, 2024 has been generous with new LLM releases.

GPT-4 Turbo has an updated knowledge cutoff of April 2023 and enhanced data processing. The Claude 3 model family (Haiku, Sonnet, and Opus) outperformed GPT-4 in cognitive tasks like math problem-solving and knowledge Q&A. Google released Gemini 1.5 with experimental long-context understanding features. Stability AI launched Stable Diffusion XL Turbo with real-time image creation and editing capabilities.

API-based access to more capable, scalable, and cost-efficient LLMs opens the door to more fine-tuning and new product development. Yet the wide availability of open-source models also intensifies market competition.

Many vendors in the Gen AI space simply offer a user interface to an open-source model or a lightly fine-tuned version with marginal performance improvements. New Gen AI products must have a tech edge over others and a unique value proposition to stand out.

We believe that the apps that innovate beyond the “LLM + UI” formula and significantly rethink the underlying workflows of enterprises or help enterprises better use their own proprietary data stand to perform especially well in this market.

Andreessen Horowitz

Enterra Solutions differentiates by combining a proprietary Autonomous Decision Science™ (ADS®) algorithm with generative AI capabilities to support enterprise data processing operations. Enterra’s platform consists of interconnected business applications with a shared analysis, optimization, and human-like decision-making layer. By consolidating and operationalizing data from a multitude of connected applications, enterprises gain a 360-degree view of their operations.

Amira Learning, in turn, developed a custom algorithm for its intelligent reading tutor based on the results of over 100 studies and field trials. By combining human expertise with technological advances, Amira built an app that is as effective as a human tutor and even more effective for English Language Learners.

Additionally, Gen AI product companies can differentiate by offering easier deployment, flexible hosting models (cloud vs on-premises), and tighter integration with the existing enterprise application stack.

The Most Promising Gen AI Opportunities Will Be In Automating Semi-Structured Tasks

Gen AI tools have already proven their chops with simple, linear tasks like writing, image generation, or coding. But there’s also a huge vista of opportunities for automating processes that require human judgment and were previously beyond the reach of deterministic, rule-based AI.

Such judgment-based tasks include insurance claims processing, legal document reviews, medical billing, customer sentiment analysis, and loads of other operational processes. The latest advances in Gen AI make this type of cognitive automation possible and profitable.

Source: Sopra Steria Next

For instance, Robin AI speeds up legal report generation, review, and analysis by 5X. Developed with Cambridge’s Investment Management team, the AI copilot for lawyers combines Anthropic’s Claude LLM with proprietary contract data and machine learning to automate tasks with high precision.

Maven AGI built an AI platform to automate customer service operations. Unlike regular customer support chatbots, Maven’s AI agents use a fine-tuned GPT-4 model connected to an enterprise-grade search engine. The system parses millions of knowledge articles to generate an answer, and a scoring system cross-checks the output against several documents to ensure accuracy before responding to the user in any of 50 languages. With this architecture, Maven AGI can resolve 93% of customer inquiries autonomously.
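The general pattern described here (retrieve relevant articles, draft an answer, and cross-check the draft against several sources before replying) can be sketched in a few functions. This is not Maven AGI’s implementation: the keyword-overlap retriever, the token-overlap support check, the `min_sources=2` rule, and the `draft_answer` stub (standing in for a call to a fine-tuned LLM) are all hypothetical simplifications:

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercase word tokens of a text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, articles: dict[str, str], k: int = 3) -> list[str]:
    """Rank article IDs by keyword overlap with the query (stand-in for a search engine)."""
    q = tokens(query)
    ranked = sorted(articles, key=lambda aid: len(q & tokens(articles[aid])), reverse=True)
    return [aid for aid in ranked[:k] if q & tokens(articles[aid])]


def draft_answer(query: str, sources: list[str]) -> str:
    """Hypothetical stub for the LLM call: returns the top retrieved passage."""
    return sources[0] if sources else ""


def supporting_sources(answer: str, sources: list[str], min_overlap: float = 0.6) -> int:
    """Count sources that share at least `min_overlap` of the answer's words."""
    answer_tokens = tokens(answer)
    if not answer_tokens:
        return 0
    return sum(
        1 for s in sources if len(answer_tokens & tokens(s)) / len(answer_tokens) >= min_overlap
    )


def answer_or_escalate(query: str, articles: dict[str, str], min_sources: int = 2) -> dict:
    """Answer only when the draft is corroborated by enough sources; otherwise escalate."""
    ids = retrieve(query, articles)
    sources = [articles[aid] for aid in ids]
    answer = draft_answer(query, sources)
    if supporting_sources(answer, sources) >= min_sources:
        return {"status": "answered", "answer": answer, "sources": ids}
    return {"status": "escalated", "answer": None, "sources": ids}


kb = {
    "kb-1": "Refunds are issued within 14 days of purchase.",
    "kb-2": "Refunds are issued within 14 days to the original payment method.",
    "kb-3": "Shipping takes 3 to 5 business days.",
}
print(answer_or_escalate("How many days for refunds?", kb)["status"])  # answered
print(answer_or_escalate("Do you ship to Mars?", kb)["status"])        # escalated
```

Because the stub copies one retrieved passage, that passage always supports it; requiring two supporting sources means at least one other article must corroborate the draft before the customer sees it.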

Building cognitive solutions for vertical use cases, however, comes with unique hurdles.

For one, companies will need access to high-quality, varied training datasets for fine-tuning, which may be hard to obtain in regulated industries. In many cases, a robust human quality review system is necessary. For example, Robin AI has in-house legal experts to validate model outputs.

Secondly, there’s the issue of data security. Plug-and-play Gen AI apps need access to corporate data sources for accurate response generation. Organizations with heavily siloed data and outdated processing architectures will need to improve their data management strategies and supporting infrastructure before integrating Gen AI products. This leads us to the next point…

Gen AI Adoption Will Trigger IT Infrastructure Modernization

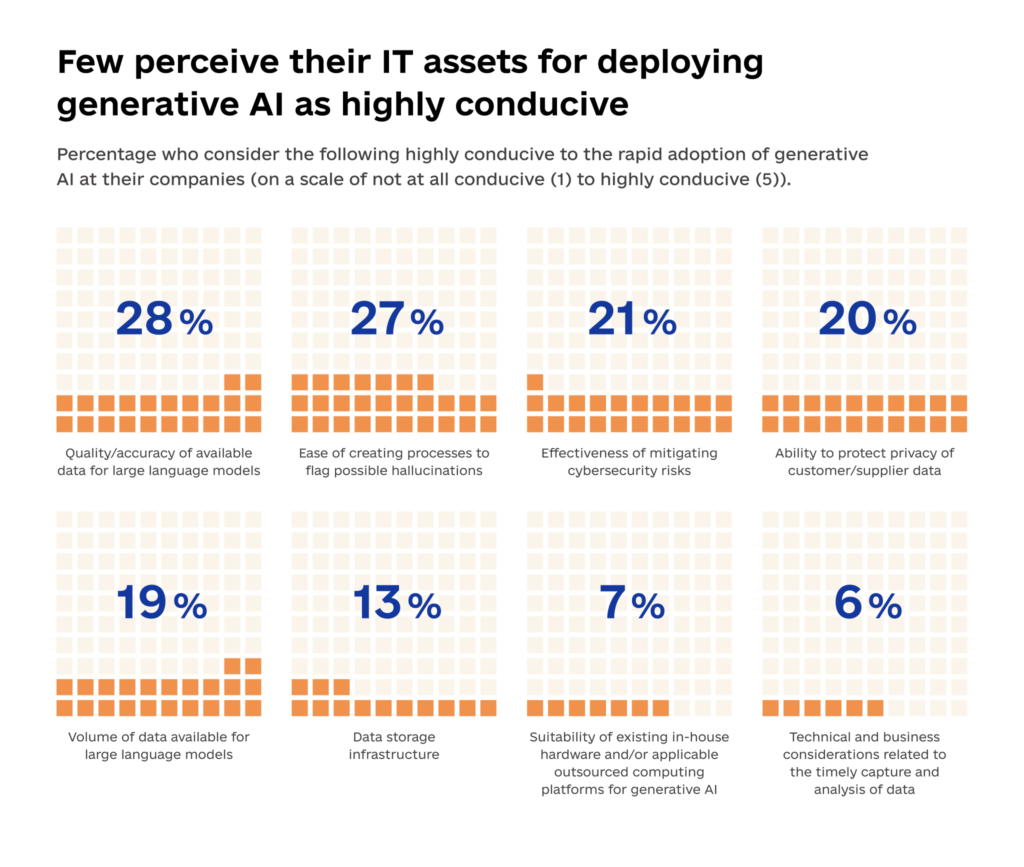

Ambition is high among enterprises, but adoption is hard. An MIT survey found that 76% of enterprises experimented with generative AI in 2023, but only 9% adopted the technology widely.

Legacy systems and subpar IT processes stall Gen AI adoption. In the same study, fewer than a third of respondents rated each of eight IT attributes as conducive to rapid AI adoption. These attributes are data quality and accuracy assessment, model hallucination detection, cyber risk mitigation, data privacy protection, large-volume data processing, data storage infrastructure, computing infrastructure, and real-time data capture and analysis.

Source: MIT Technology Review

What’s even more curious is that companies that have already experimented with Gen AI deployments have less confidence in their IT infrastructure than their peers. In other words, many leaders underestimate the groundwork required to prepare for Gen AI adoption.

At 8allocate, we always recommend starting with an assessment to understand the state of your IT infrastructure better. Our consulting team helps determine the critical areas of improvement, optimization, or incremental modernization at every layer — from hardware and network configurations to cloud services and data management.

Effectively, we help you create a more conducive environment for developing and deploying Gen AI solutions by adding the missing guardrails and capabilities. These may include optimizing your data storage systems (cloud data lakes and warehouses), introducing real-time streaming pipelines to deliver fresh data to the models faster, adopting metadata management tools, and developing better governance practices for data management and analytics.

Gen AI applications are latency-sensitive, so finding the best architecture for storing, analyzing, and interconnecting large data sets and business logic is challenging but possible with an experienced technology services partner.

Gen AI Services Market is Emerging

Gen AI solutions require not just new technology infrastructure but also people with unique skills. Finding AI talent, however, proves to be as feasible as debugging a software app with zero documentation.

Almost 70% of businesses struggle to attract enough talent to manage AI-based systems. The shortages are unlikely to end soon. IDC expects over 90% of companies globally will continue to experience IT skills shortages by 2026, leading to $5.5 trillion in losses due to product delays, impaired competitiveness, and loss of new business.

To gain the missing competencies, leaders are increasingly looking outward — towards the emerging market of Gen AI tech services providers.

Emerging market for services relating to gen AI/AI could be worth more than $200 billion by 2029.

McKinsey

Tech services providers specializing in AI/ML implementation can help businesses address their lack of experience in developing and deploying Gen AI solutions. Apart from closing the Gen AI talent gap, external providers can bring cross-functional expertise in building AI solutions for different domains (e.g., FinTech or ESG) and deliver process guidance, for example, in AI security or machine learning operations (MLOps).

As studies have shown, few companies have reached a high level of AI maturity. Substantial gaps remain in data management readiness and in the process maturity needed to deploy POCs and assess their impact on business operations. Technology services providers can assist customers with the necessary foundational transformations and speed up Gen AI adoption without compromising security or compliance, two areas of growing importance.

AI Governance Will Soon Become Mandatory

Although 75% of CEOs say that trusted AI is impossible without effective AI governance, only 39% have sufficient Gen AI governance in place today, according to an IBM survey.

That’s problematic as emerging AI regulations call for precise control over data quality, system transparency, robustness, and security.

The Council of the EU gave final approval to the AI Act in May 2024, establishing a risk-based approach to regulating private and public AI systems on the market. In the US, over 400 AI-related bills are at different stages of discussion across 44 states, alongside the federal Executive Order on Safe, Secure, and Trustworthy Artificial Intelligence. Canada is also working on a federal Artificial Intelligence and Data Act (AIDA), while the UK and Australia have both introduced voluntary AI guidelines.

Generally, policymakers take a risk-based approach to regulating AI, which scales the applicable requirements to an AI system’s intended use and risk profile. This is the approach of the EU AI Act and Canada’s AIDA.
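In practice, a risk-based regime pushes companies to keep an inventory of their AI systems and tag each one with a risk tier that determines its obligations. A minimal sketch, loosely modeled on the EU AI Act’s four tiers (unacceptable, high, limited, and minimal risk); the use-case labels, the mapping, and the obligation summaries are simplified, hypothetical examples, and a real classification has to follow the Act’s text and annexes:

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"
    UNCLASSIFIED = "unclassified"


# Simplified summaries of what each tier implies (illustrative, not legal text)
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: "prohibited; do not deploy",
    RiskTier.HIGH: "risk management, data governance, documentation, logging, human oversight",
    RiskTier.LIMITED: "transparency: disclose that users are interacting with AI",
    RiskTier.MINIMAL: "no specific obligations; voluntary codes of conduct",
    RiskTier.UNCLASSIFIED: "route to legal/compliance review before deployment",
}

# Hypothetical mapping of internal use-case labels to tiers
USE_CASE_TIERS = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "credit_scoring": RiskTier.HIGH,
    "cv_screening": RiskTier.HIGH,
    "customer_chatbot": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
}


@dataclass
class AISystem:
    name: str
    use_case: str

    @property
    def tier(self) -> RiskTier:
        # Unknown use cases are never silently treated as low risk
        return USE_CASE_TIERS.get(self.use_case, RiskTier.UNCLASSIFIED)


inventory = [
    AISystem("Loan pre-approval model", "credit_scoring"),
    AISystem("Support assistant", "customer_chatbot"),
    AISystem("Contract summarizer", "document_summary"),
]
for system in inventory:
    print(f"{system.name}: {system.tier.value} -> {OBLIGATIONS[system.tier]}")
```

Defaulting unmapped use cases to a review queue, rather than to the lowest tier, keeps new AI systems from slipping into production without a compliance check.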

Yet some countries are also taking a dual approach, issuing both cross-sectoral and sector-specific compliance requirements. The cross-sectoral provisions establish a baseline framework with which all AI systems should comply. However, extra guidelines may also apply to businesses using AI in specific sectors and/or for specific use cases. Singapore’s Model AI Governance Framework, for example, provides general guidelines for the ethical use of AI for all businesses. However, financial institutions are also expected to follow the Monetary Authority of Singapore’s principles on Fairness, Ethics, Accountability, and Transparency (FEAT) in the Use of AI and Data Analytics.

For businesses, the emerging regulatory landscape heightens the need for auditability and explainability of AI systems, especially those intended for regulated industries like finance, education, or healthcare. This, in turn, requires rigorous AI system security and monitoring, as well as an effective data governance strategy for providing the necessary disclosures.
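Auditability usually starts with a tamper-evident record of what each AI system was asked, what it answered, and which model version produced the answer. A minimal sketch of such an audit trail, assuming a hypothetical in-memory log in which every entry stores the SHA-256 hash of the previous one, so any later edit breaks the chain; the system and model names are made up, and a production setup would persist entries to write-once storage and apply retention rules:

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditLog:
    """Append-only, hash-chained log of model interactions (in-memory sketch)."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, system: str, model_version: str, prompt: str, output: str) -> dict:
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "system": system,
            "model_version": model_version,
            # Store a hash of the prompt rather than raw text that may contain personal data
            "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
            "output": output,
            "prev_hash": self.entries[-1]["entry_hash"] if self.entries else "0" * 64,
        }
        entry["entry_hash"] = self._hash(entry)
        self.entries.append(entry)
        return entry

    @staticmethod
    def _hash(entry: dict) -> str:
        """Hash every field except the entry's own hash, with keys in a stable order."""
        payload = {key: value for key, value in entry.items() if key != "entry_hash"}
        return hashlib.sha256(json.dumps(payload, sort_keys=True).encode("utf-8")).hexdigest()

    def verify(self) -> bool:
        """Recompute the chain; return False if any entry was altered or reordered."""
        prev = "0" * 64
        for entry in self.entries:
            if entry["prev_hash"] != prev or entry["entry_hash"] != self._hash(entry):
                return False
            prev = entry["entry_hash"]
        return True


log = AuditLog()
log.record("claims-triage", "claims-llm-v1.3", "Summarize claim #123", "Low-risk water damage claim.")
log.record("claims-triage", "claims-llm-v1.3", "Summarize claim #124", "Possible duplicate submission.")
print(log.verify())  # True
log.entries[0]["output"] = "Edited after the fact"
print(log.verify())  # False
```

Recording the model version alongside each output is what later lets an auditor tie a specific decision to the exact model that made it.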

Conclusions

Generative AI became a major theme in boardroom discussions this year, but the real breakthroughs are still ahead. Aspirations for Gen AI adoption and product development are high, but the maturity of people, processes, and infrastructure is still lagging.

Companies that focus on improving their foundational AI capabilities before going all in on Gen AI deployment will emerge as long-term winners. Likewise, new market entrants that invest in fine-tuning their own models and integrating more deeply with enterprise workflows will outpace those offering “wrapper” solutions over open-source LLMs.

As legal compliance becomes mandatory, AI governance, security, and explainability will also distinguish the leaders from the laggards. Capabilities assessments, combined with careful planning and targeted investments in the right areas, will be key to long-term success.

Create and refine your Gen AI adoption strategy with 8allocate’s help. As part of our AI implementation service, we help global businesses create a conducive environment for deploying custom AI solutions and ensure their accuracy and security. Contact us to learn more about our engagement process.

Frequently Asked Questions

Quick Guide to Common Questions

What are the key generative AI trends shaping 2024 and beyond?

The most impactful generative AI trends include the rise of fine-tuned LLMs, Gen AI applications shifting toward differentiation, automation of semi-structured tasks, IT infrastructure modernization, the emergence of Gen AI service providers, and growing AI governance requirements. These trends will define how enterprises adopt and scale AI technologies.

How will fine-tuned LLMs change the AI landscape?

Fine-tuned large language models (LLMs) provide higher accuracy, domain-specific knowledge, and better security compared to general-purpose models. Enterprises are increasingly customizing foundation models like GPT, Llama, and BERT for legal, financial, and healthcare applications to enhance performance while ensuring data privacy.

Why is AI infrastructure modernization critical for Gen AI adoption?

Many organizations lack real-time data processing, scalable cloud infrastructure, and robust cybersecurity—all essential for deploying Gen AI at scale. Without modern IT environments, AI models suffer from slow processing, security vulnerabilities, and data inconsistencies, delaying enterprise adoption.

How will AI governance impact businesses using Gen AI?

AI governance is becoming mandatory, with regulations like the EU AI Act and emerging US federal guidelines enforcing risk-based compliance. Businesses must ensure AI security, bias mitigation, model transparency, and auditability to remain compliant and trustworthy.

How does 8allocate help businesses implement generative AI solutions?

8allocate specializes in AI implementation, fine-tuned model development, and AI-driven business optimization. We help organizations modernize IT infrastructure, secure AI systems, and integrate Gen AI into business workflows—ensuring scalable, high-impact AI adoption.