Whether you look at your bank account balance or scroll through Netflix suggestions, you’re interacting with analytics. Or more precisely: its outputs — the insights.

Yet, far fewer people think about how data analytics systems work and what makes them useful, valuable, and effective. This post provides a lowdown of the key components of data and analytics systems, their value for businesses, and the growth opportunities they create.

What is Data and Analytics (D&A)?

According to Gartner:

Data and analytics (D&A) refers to the ways organizations manage data to support all its uses and analyze data to improve decisions, business processes, and outcomes, such as discovering new business risks, challenges, and opportunities.

In other words: data and analytics supply businesses with operational intelligence for making better decisions across functions. For example, Target attributes a 25% increase in product restock speeds to a new predictive inventory positioning system and a data-driven sortation model.

D&A also helps identify trends and opportunities businesses haven’t yet considered and power innovative customer-facing products or services. Nike, in turn, grew the volume of direct-to-consumer online sales by 16% over the last year with advanced customer analytics, harnessing data for its digital channels.

The main value of data lies in analysis (aka insights), delivered by the adopted analytical tools and models. Without the application of analytics techniques, data holds little value.

Popular types of data analytics techniques include:

- Descriptive analytics performs analysis of historical data to identify correlations in past events.

- Diagnostic analytics drills down into the causes of specific outcomes and problems through analysis of available data.

- Predictive analytics relies on historical data to forecast future trends and model different outcomes.

- Prescriptive analytics suggests the optimal path forward to achieving specific goals or outcomes.

The above are examples of both traditional analytics and data science.
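To make the distinction between the first and third technique more concrete, here is a minimal Python sketch. The `monthly_sales` figures and both function names are made up for illustration, and the straight-line trend is only one of many possible forecasting approaches.

```python
from statistics import mean

# Hypothetical monthly sales figures (illustrative only).
monthly_sales = [100.0, 110.0, 120.0, 130.0, 140.0, 150.0]


def describe(values):
    """Descriptive analytics: summarize what has already happened."""
    return {"mean": mean(values), "min": min(values), "max": max(values)}


def forecast_next(values):
    """Predictive analytics: fit a least-squares line and extrapolate one step."""
    n = len(values)
    xs = range(n)
    x_bar, y_bar = mean(xs), mean(values)
    slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, values)) / sum(
        (x - x_bar) ** 2 for x in xs
    )
    intercept = y_bar - slope * x_bar
    return intercept + slope * n


summary = describe(monthly_sales)
next_month = forecast_next(monthly_sales)
```

The descriptive step only looks backward, while the predictive step uses the same history to estimate a value that has not been observed yet.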

Data Analytics vs. Data Science: What’s the Difference?

Before we go forward, let’s settle the differences between these two terms. Both data analytics and data science deal with data, but each encompasses different processes.

- Data analytics is the process of transforming available data into actionable insights: visualizations, dashboards, summaries, and forecasts.

- Data science, in turn, is a set of approaches for designing and developing new methods and tools for data collection, transformation, analysis, and modeling.

Data analytics primarily focuses on organizing historical data to inform decisions in the present. By contrast, data science involves interpreting data to identify correlations for making predictions and forecasts.

Here’s a side-by-side comparison of data analytics vs. data science for easier understanding:

The Business Value of Data Analytics

Data in its raw state has limited value. It’s the application of analytics that makes it useful. Analytics helps discover the quantitative and qualitative metrics businesses can use for informed decision-making.

Generally, data analytics generates business value in the following areas:

- Process management optimization

- Strategic decision-making

- New product development

- Opportunity evaluations

- Better marketing and personalization

- Competitive analysis

- Revenue maximization

- Business model transformations

According to BARC’s Data Culture Survey 2023, 49% of respondents have already achieved substantial improvements in decision-making thanks to their investments. And another 37% are making continuous process improvements based on data.

Take it from Amazon. The company uses serverless data analytics to maximize the performance of its global logistics network, which moves billions of packages monthly across the globe. The company also heavily relies on big data to optimize its assortment, based on customer behaviors and purchasing trends to maintain high growth of its e-commerce operations.

McDonald’s, in turn, is working on the deployment of Automated Order Taking (AOT) technology across their chains. The new solution will not only improve the ordering experience but also help the company build a bigger database of customer insights.

Overall, data analytics can bring value to almost every function, so it's no wonder that organizations are making good progress. According to the latest NewVantage Partners annual survey, 92% of surveyed organizations have delivered measurable business value from data and analytics investment and made substantial progress across the key data management initiatives in 2023.

Source: NewVantage Partners

Data and Analytics Trends for 2023 and Onward

Ongoing digitization, coupled with self-service business intelligence (BI) tools, has democratized access to analytics.

Investments in D&A also remain strong despite (or even due to) ongoing economic uncertainty. In 2023, business intelligence and data analytics were selected as the top areas of increased investment by 55% of global CIOs, with most interest concentrated on the following six macro trends in the D&A market.

Democratized Access to Analytics

Businesses have been bringing analytics to more functions. 92% of companies say that their usage of BI/analytics tools has substantially increased over the past five years. Much of this growth is fostered by self-service business intelligence (BI) tools.

A self-service BI tool provides a user-friendly interface and pre-built templates for report creation, data visualization, and ad-hoc analysis. “Self-service” means that business users don’t need the help of data analysts or the IT department for data modeling tasks. Instead, they can easily access pre-made datasets or pull custom data via connectors to corporate databases, cloud storage systems, or SaaS applications. Afterward, users can apply no-code tools for data cleaning, transformation, and modeling. For example, Power BI from Microsoft offers 30 different ‘out of the box’ report models.

Self-service BI democratizes access to data by enabling anyone to perform custom data analysis with speed and ease. For example, PwC Banking can now deploy new customer-facing analytics solutions in 4-6 weeks, using Azure data management architecture and Power BI platform.

That said, to benefit from self-service analytics, business leaders will need a strong data management strategy, promoting greater data accessibility, mobility, and security. Without an effective architecture on the backend, self-service BI tools have limited value.

Growing Adoption of Real-Time Data Analytics

Real-time analytics means applying analytics to data as soon as it enters the system, i.e., there’s no wait between data arrival and report creation.

Traditional analytics systems often operate on a batch processing schedule: new data is uploaded to the warehouse at pre-programmed intervals and only then processed for analysis.

In contrast, real-time analytics systems operate on the edge — at a virtual location as close to the data’s origins as possible. Examples of real-time analytics deployment scenarios include:

- In-database — an analytics layer is embedded straight into the database management system.

- In-memory — analyzed data is stored in random-access memory (RAM) instead of physical disks for lower latency processing.

Real-time analytics can also be achieved with parallel programming — instructing the system to use multiple processors with individual OS and memory resources to perform part of the analysis in tandem with other systems. In each case, the goal is to achieve the minimum possible latency for the analytics apps.
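The contrast between batch and real-time processing described above can be sketched in a few lines of Python. This is a simplified illustration, not a production design: the `RunningMean` class, the `batch_mean` function, and the `events` list are all hypothetical names, and the state simply lives in process memory.

```python
class RunningMean:
    """Keeps an up-to-date average in memory, refreshed on every new event."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0

    def update(self, value):
        # Incremental update: no need to re-read earlier events.
        self.count += 1
        self.mean += (value - self.mean) / self.count
        return self.mean


def batch_mean(values):
    """Batch style: wait until all data has landed, then compute once."""
    return sum(values) / len(values)


# Hypothetical sensor readings arriving one by one.
events = [12.0, 15.0, 9.0, 20.0]

stream = RunningMean()
live_values = [stream.update(v) for v in events]  # a fresh metric after each event
scheduled_value = batch_mean(events)              # a single metric after the batch run
```

Both approaches end at the same number; the difference is that the streaming version has a usable answer after every single event instead of after the next scheduled load.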

Such real-time solutions are actively explored in the healthcare sector for aggregating data from connected medical devices and systems. The automotive sector also leverages real-time analytics to power the new generation of advanced driver-assistance systems (ADAS).

As of 2022, 97% of companies with mature data management processes use streaming data at least to some extent, according to Confluent. The majority of adopters attribute a 10%+ increase in annual revenue growth to their investment.

Composable Analytics Architectures

Big data analytics is a $272 billion market, shared between infrastructure providers, cloud storage companies, and SaaS and PaaS analytics platforms. On top of that, there’s a growing number of open-source analytics solutions.

This means business leaders have an ample choice of tools to create composable architectures. New analytics projects and products can be built with pre-made, reusable, stackable, and re-configurable components, which can easily be adapted to new purposes.

Technologically, composability is achieved with microservices-based architecture. Instead of using a monolith analytics stack, organizations develop bounded, loosely coupled analytics components. Because such analytics components can be connected in different ways and reused multiple times, you can create new analytics experiences faster.

Instead of building new solutions from scratch, your teams can create new analytics apps from pre-made business capabilities (e.g., standard interface elements, pre-made data pipelines, open APIs). Low-code and no-code platforms can also be easily stacked on top to support extra analytics use cases.
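As a rough illustration of composability, the sketch below builds two different analytics outputs from the same small, reusable components. It runs in a single process for brevity, whereas a real composable architecture would typically expose such components as separate services. All names (`compose`, `drop_incomplete`, `convert`, `total_by`) and the sample `orders` are assumptions made for this example.

```python
from functools import reduce
from typing import Any, Callable

Step = Callable[[Any], Any]


def compose(*steps: Step) -> Step:
    """Chain independent components into one pipeline, applied left to right."""
    def pipeline(data: Any) -> Any:
        return reduce(lambda acc, step: step(acc), steps, data)
    return pipeline


def drop_incomplete(rows):
    """Reusable component: discard rows without an amount."""
    return [r for r in rows if r.get("amount") is not None]


def convert(rate):
    """Reusable component factory: multiply every amount by a fixed rate."""
    def step(rows):
        return [{**r, "amount": r["amount"] * rate} for r in rows]
    return step


def total_by(key):
    """Reusable component factory: sum amounts per value of `key`."""
    def step(rows):
        totals = {}
        for r in rows:
            totals[r[key]] = totals.get(r[key], 0.0) + r["amount"]
        return totals
    return step


# Hypothetical order records.
orders = [
    {"region": "EU", "amount": 10.0},
    {"region": "EU", "amount": None},
    {"region": "US", "amount": 5.0},
    {"region": "EU", "amount": 2.5},
]

# Two different "analytics apps" assembled from the same building blocks.
revenue_by_region = compose(drop_incomplete, convert(2.0), total_by("region"))
clean_order_count = compose(drop_incomplete, len)
```

Because each component has one narrow job and a plain input and output, swapping or reordering them does not require touching the others.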

The Rise of Augmented Analytics

Augmented analytics relies on emerging technologies like machine learning and AI to assist with routine data analytics tasks such as data preparation, report generation, and analysis interpretation.

The goal of augmented analytics is two-fold:

- Automate time-intensive aspects of data science and analytics model development, management, and deployment.

- Improve the experience of business users and citizen developers working with BI and analytics platforms.

By adding AI capabilities to existing analytics solutions, business leaders can overcome the common challenges of:

- Large data volumes and multiple standards. ML algorithms can perform exploratory analysis to surface new data patterns, highlight changes in trends, and detect anomalies (or inconsistencies) in available data.

- Complex data querying process. Thanks to natural language processing (NLP), augmented analytics solutions can handle written and spoken queries and provide users with conversational answers about the analyzed data.

- Labor-intensive data processing and transformation. AI-driven solutions can elastically scale to operationalize more data sources and effectively transform raw data into an analytics-ready state.

In short, augmented analytics tools can help your teams engage with data through conversational interactions, analyze information from new angles, and generate insights faster thanks to greater data accessibility.
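To give a feel for the anomaly detection mentioned above, here is a deliberately simple sketch. It uses a plain z-score rule as a stand-in for the ML models a real augmented analytics product would use; the `find_anomalies` function, the threshold of 2.0, and the `daily_orders` series are illustrative assumptions.

```python
from statistics import mean, pstdev


def find_anomalies(values, threshold=2.0):
    """Flag points that sit more than `threshold` standard deviations from the mean."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return []  # all values identical, nothing stands out
    return [(i, v) for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]


# Hypothetical daily order counts with one obvious spike.
daily_orders = [10, 11, 9, 10, 12, 10, 50]
anomalies = find_anomalies(daily_orders)
```

A rule like this is easy to explain to business users, but it is sensitive to the outliers it is trying to find, which is one reason production tools tend to rely on more robust methods.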

Increased Usage of Synthetic Data

Synthetic data are computer-generated datasets, used for training machine learning (ML) and deep learning (DL) models.

To learn, AI algorithms must consume vast amounts of data — much of which can be unavailable, privacy-breaching, or expensive to obtain. For example, to train a self-driving vehicle algorithm, you’ll need a lot of data from accidents and crashes. Obtaining it in real life can be downright dangerous. Similarly, public or private training datasets may not always have optimal representation (e.g., they mostly contain data from one demographic).

Synthetic data enables AI developers to test the produced models on mock data, and then use real data when they get access to it in the future. Moreover, scientists agree that synthetic data could be better than real-world datasets for certain AI use cases, especially in sensitive industries like healthcare or finance.

In fact, the US Census Bureau plans to use synthetic data to enhance the privacy of the annual American Community Survey respondents. Administrative Data Research UK plans to do the same for data collected by the Office for National Statistics and the UK Data Service. Both projects will allow private companies to access new data sets, based on close-to-real-life consumer data.

Overall, Gartner predicts that by 2024, as much as 60% of data for AI will be synthetic, up from just 1% in 2021. This shift will also accelerate the development of new AI models.
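For intuition, the most naive way to produce synthetic data is to fit a simple distribution to a real column and sample new values from it. The sketch below does exactly that, assuming the column is roughly normally distributed; `real_ages` and `synthesize` are hypothetical names, and this approach carries no formal privacy guarantee. Real synthetic data generators are considerably more sophisticated.

```python
import random
from statistics import mean, stdev

# Hypothetical "real" values that cannot be shared directly.
real_ages = [23, 35, 41, 29, 52, 38, 47, 31]


def synthesize(values, n, seed=0):
    """Sample `n` synthetic values from a normal distribution fitted to `values`."""
    rng = random.Random(seed)  # seeded so the output is reproducible
    mu, sigma = mean(values), stdev(values)
    return [rng.gauss(mu, sigma) for _ in range(n)]


synthetic_ages = synthesize(real_ages, n=1000)
```

The synthetic column preserves the overall shape of the original (its mean and spread) without containing any of the original records.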

Focus on DataOps

Businesses have major data reserves and a growing appetite for accessible analytics. What many lack are effective processes for analyzing raw data and transforming it into insights at speed.

Enter DataOps — a new methodology for improving the time-to-market for data analytics solutions. Similar to DevOps, DataOps focuses on improving the processes of pushing new data products into production, with a particular emphasis on analyzing raw data.

DataOps assumes the creation of streamlined, (semi-)automated processes for:

- Data access and collection

- Dataset creation and management

- Data delivery for new analytics apps

According to the latest estimates, half of companies (50%) spend between 8 and 90 days deploying one machine learning model (with 18% taking longer than 90 days). That’s on top of 6 to 18 months spent on model development.

The reasons for long analytics development cycles are many: poor system architecture, siloed data pipelines, model reproducibility challenges, and lack of in-house resources.

The goal of DataOps is to create better processes for new analytics solution development, data accessibility for self-service BI tools, and custom model deployment, so that you can reduce time-to-market for new AI and analytics pilots.
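The three process areas listed above (collection, dataset creation, delivery) can be wired into one automated run with a check in between. The following is a minimal sketch under stated assumptions: the stage functions, the hard-coded source rows, and the in-memory `sink` are all hypothetical placeholders for real connectors, storage, and orchestration tooling.

```python
def collect() -> list[dict]:
    """Data access and collection (stand-in for a real source connector)."""
    return [
        {"order_id": 1, "amount": "19.90"},
        {"order_id": 2, "amount": "5.00"},
        {"order_id": 3, "amount": ""},  # incomplete record
    ]


def build_dataset(raw: list[dict]) -> list[dict]:
    """Dataset creation: drop rows without an amount and cast types."""
    return [
        {"order_id": r["order_id"], "amount": float(r["amount"])}
        for r in raw
        if r["amount"]
    ]


def check(dataset: list[dict]) -> None:
    """Automated gate: fail the run instead of delivering a bad dataset."""
    if not dataset:
        raise ValueError("dataset is empty")
    if any(row["amount"] < 0 for row in dataset):
        raise ValueError("negative amount found")


def deliver(dataset: list[dict], sink: list) -> int:
    """Data delivery: hand the dataset to a downstream analytics app."""
    sink.extend(dataset)
    return len(dataset)


def run_pipeline(sink: list) -> int:
    dataset = build_dataset(collect())
    check(dataset)
    return deliver(dataset, sink)


analytics_app_input = []
delivered_rows = run_pipeline(analytics_app_input)
```

The point of the gate is that a broken dataset stops the run early, so downstream dashboards never silently pick up bad numbers.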

Challenges of Implementing Data Analytics

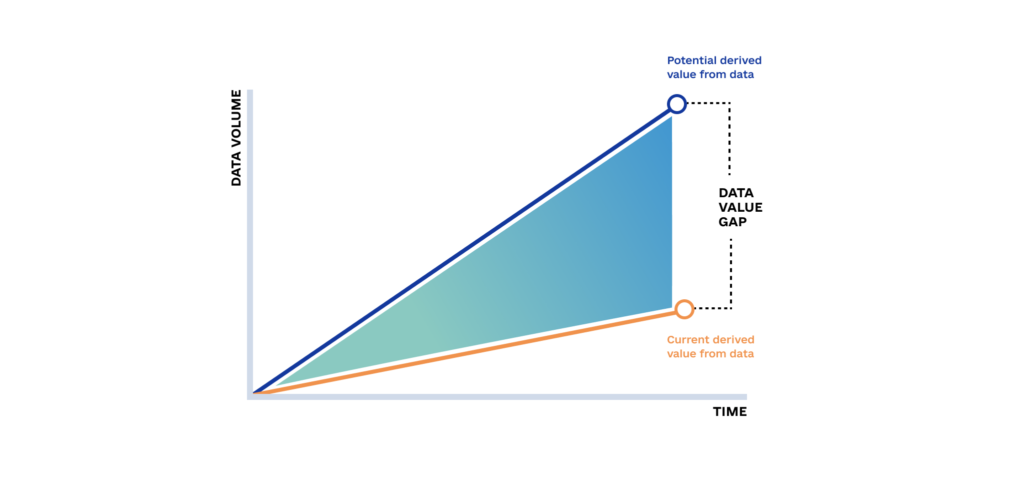

Although aspirations for becoming data-driven are high, the reality is more grounded. Only 32% of enterprises are realizing tangible benefits from their data.

Source: Accenture

To close the data value chasm, organizations will need to address the following challenges.

Questionable Data Quality

Data availability has increased tremendously, but its quality remains questionable. Over 40% of business leaders cite “lack of quality data” as the biggest barrier to BI and analytics tools adoption.

The common data quality issues include:

- Data entry errors

- Lack of data standardization

- Data silos

- No report certification processes

- Missing data quality rules and metrics

Although companies have ample data, they often struggle to maintain it in an analytics-ready state. The lack of shared master standards for data quality and formatting references results in siloed data storage. The absence of automated processes for remediating errors results in low data confidence. Outdated (or non-existent) data management flows, in turn, make it hard to communicate changes in data state to downstream systems and users.

In fact, over 50% of businesses say that such data quality issues impact 25% or more of their revenue, which is a major increase from 2022.

Better data infrastructure and engineering practices, paired with a strong data governance policy, are essential for improving data quality.
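Data quality rules and metrics do not have to be complicated to be useful. Below is a small sketch of two common metrics, completeness and validity, computed over a hypothetical `customers` table. The function names and the specific rules (an email contains "@", a country code is two uppercase letters) are simplifying assumptions for illustration.

```python
def completeness(rows, field):
    """Share of rows where `field` is present and non-empty."""
    filled = sum(1 for r in rows if r.get(field) not in (None, ""))
    return filled / len(rows)


def validity(rows, field, rule):
    """Share of non-empty values of `field` that satisfy `rule`."""
    values = [r[field] for r in rows if r.get(field) not in (None, "")]
    if not values:
        return 0.0
    return sum(1 for v in values if rule(v)) / len(values)


# Hypothetical customer records with typical quality problems.
customers = [
    {"id": 1, "email": "a@example.com", "country": "DE"},
    {"id": 2, "email": "", "country": "de"},            # missing email, non-standard code
    {"id": 3, "email": "not-an-email", "country": "US"},  # data entry error
    {"id": 4, "email": "d@example.com", "country": "FR"},
]

quality_report = {
    "email_completeness": completeness(customers, "email"),
    "email_validity": validity(customers, "email", lambda v: "@" in v),
    "country_standardized": validity(
        customers, "country", lambda v: len(v) == 2 and v.isupper()
    ),
}
```

Tracking a handful of such scores over time gives teams an early, objective signal that a source system has started producing unreliable data.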

Bad Data Management Processes

Rapid digitization should have led to increased productivity, but many teams don’t see these results. Data management has not kept pace with new technologies, resulting in enormous costs and friction.

Here’s a simple example: Your Sales manager wants to understand how many customers the company acquired last month. However, they struggle to get the right number because Sales gives credit to new customers when the deal is signed, while Finance gives credit when the first invoice is paid. As a result, the records in the CRM and Finance systems don’t add up and the manager can’t use an available self-service BI tool for analysis. Instead, they rely on guesswork and approximation to evaluate the actual state of affairs.
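The mismatch is easy to reproduce. In the sketch below, the same hypothetical `deals` records produce two different "new customers in April" figures, depending on whether the count is based on the signing date (the Sales definition) or the first paid invoice (the Finance definition). Customer names, dates, and field names are invented for the example.

```python
from datetime import date

# Hypothetical deal records shared by the CRM and Finance systems.
deals = [
    {"customer": "Acme", "signed": date(2024, 3, 28), "first_invoice_paid": date(2024, 4, 10)},
    {"customer": "Globex", "signed": date(2024, 4, 5), "first_invoice_paid": date(2024, 4, 20)},
    {"customer": "Initech", "signed": date(2024, 4, 25), "first_invoice_paid": None},
    {"customer": "Umbrella", "signed": date(2024, 4, 12), "first_invoice_paid": date(2024, 5, 3)},
]


def new_customers(records, year, month, event):
    """Customers whose `event` date falls in the given month."""
    return sorted(
        r["customer"]
        for r in records
        if r[event] is not None and (r[event].year, r[event].month) == (year, month)
    )


sales_view = new_customers(deals, 2024, 4, "signed")                 # Sales definition
finance_view = new_customers(deals, 2024, 4, "first_invoice_paid")   # Finance definition
```

Neither number is wrong. The two teams are answering different questions, which is why an agreed, documented metric definition has to come before any self-service dashboard.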

Oftentimes, companies have the technical means to analyze data but don’t have effective processes for creating reliable datasets and circulating insights internally. Because of that, organizations only capture 10 to 20 dollars of every 100 dollars of growth opportunities resting within the available data.

If that sounds like your case, reach out to our big data analytics experts. Our team can analyze the current state of your data infrastructure and help implement more effective architecture for data and analytics.

Privacy and Compliance Requirements

A number of global privacy laws restrict businesses in their ability to collect, store, and use different types of data, especially personally identifiable information (PII), in data analytics programs. Some records must be anonymized or excluded from processing, which limits your ability to get reliable data.

Failure to comply with such regulations can result in major fines. Sephora recently settled a $1.2m lawsuit over a California Consumer Privacy Act (CCPA) violation because it allegedly provided user data to third parties via tracking technologies without properly informing the customers. Almost every Big Tech company, in turn, has been the target of GDPR fines from European privacy watchdogs at some point.

That said, data analytics and customer privacy are not mutually exclusive. What the regulations are pushing for is more ethical, secure, and auditable data collection and processing.

In practice, privacy-friendly analytics means building an effective data transformation process to ensure proper anonymization and data masking. It also involves adopting software engineering frameworks such as privacy by design and applicable ISO standards.
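As an illustration of what such a transformation step might look like, the sketch below replaces an email address with a keyed-hash token and a masked display value before the record reaches the analytics layer. The `SECRET_KEY` value, the function names, and the record layout are assumptions for this example. Note that keyed hashing is pseudonymization rather than full anonymization, so the output may still count as personal data under regulations like GDPR.

```python
import hashlib
import hmac

# Hypothetical key; in practice it would come from a secrets manager.
SECRET_KEY = b"replace-with-a-managed-secret"


def pseudonymize(value: str, key: bytes = SECRET_KEY) -> str:
    """Keyed hash: the same input always maps to the same token, so joins still work."""
    digest = hmac.new(key, value.lower().encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def mask_email(email: str) -> str:
    """Keep only the first character of the local part for display purposes."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"


def to_analytics_record(raw: dict) -> dict:
    """Strip direct identifiers before the record enters the analytics layer."""
    return {
        "customer_token": pseudonymize(raw["email"]),
        "email_masked": mask_email(raw["email"]),
        "country": raw["country"],
        "order_total": raw["order_total"],
    }


raw_order = {"email": "Jane.Doe@example.com", "country": "DE", "order_total": 42.5}
safe_order = to_analytics_record(raw_order)
```

Analysts can still count orders per customer or per country using `customer_token`, but the raw email never leaves the transformation step.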

8allocate, for example, helped develop an advanced data platform with AI capabilities, which is now used by global banks. The solution has air-tight security and privacy-centered data processing. Contact us for a full case study.

Final Thoughts

Without a doubt, strategic investments in data and analytics can bring major ROI. The more data you can analyze, the more internal processes and external products you can improve. Such day-to-day advances eventually build up to long-term competitiveness and innovation.

Yet, analytics programs also require meticulous planning and high-precision execution. User-facing analytics solutions only bring value when they’re backed by effective, scalable, high-concurrency data processing infrastructure — and that’s the area where 8allocate can help.

How 8allocate Can Help You Harness the Power of Data Analytics

8allocate helps global businesses launch robust, scalable, privacy-centered analytics solutions. We offer end-to-end support: from initial infrastructure assessments and solutions discovery to managed analytics system implementation and maintenance. Our data analysts are well-versed in the analysis of various data types.

Our key areas of big data analytics expertise:

- Data Collection: Our data analysts implement custom data source integrations and robust data transformation pipelines.

- Statistical analysis system: Get reliable insights for strategic decision-making, based on historical trends.

- Predictive analytics solutions: Forecast future outcomes with high confidence to operate in lock-step with market changes.

- Self-service BI and data visualization: Empower business users with easily accessible information, custom dashboards, and reports.

- Data quality assurance: Maintain the highest standards of data integrity, accuracy, and freshness with streamlined quality checks.

Contact us to receive a personalized presentation on our data analytics capabilities, service lines, and successfully delivered projects.

Frequently Asked Questions

Quick Guide to Common Questions

What is data and analytics (D&A)?

Data and analytics (D&A) involve managing, analyzing, and using data to enhance decision-making and improve business outcomes. Companies use analytics to discover risks, trends, and opportunities that drive operational efficiency and innovation.

What’s the difference between data analytics and data science?

Data analytics transforms data into actionable insights, focusing on past trends and performance. Data science, on the other hand, develops new methods for data collection, modeling, and prediction, enabling AI-driven solutions and forecasting.

How does data analytics create business value?

Data analytics optimizes processes, informs strategic decisions, enhances marketing personalization, drives revenue growth, and supports competitive analysis. Companies like Amazon and McDonald’s leverage analytics for supply chain efficiency and customer insights.

What are the latest trends in data and analytics?

Key trends shaping data analytics include:

- Democratized analytics: Self-service BI tools empower business users to generate insights independently.

- Real-time data analytics: Immediate insights improve decision-making in logistics, finance, and healthcare.

- Composable architectures: Microservices-based systems enable flexible and scalable analytics solutions.

- Augmented analytics: AI-driven automation enhances data preparation, interpretation, and decision support.

- Synthetic data usage: AI-generated data improves model training while maintaining privacy compliance.

- DataOps adoption: Streamlining data pipelines accelerates analytics deployment and reliability.

What are the biggest challenges in implementing data analytics?

- Poor data quality: Inconsistent, siloed, or incomplete data affects decision-making.

- Inefficient data management: Disconnected processes and lack of governance slow down insights generation.

- Privacy & compliance: Regulations like GDPR and CCPA require secure and ethical data usage.