Artificial intelligence (AI) is entering the mainstream. But like any emerging technology, AI carries both benefits and risks.

Over 80% of users are worried about the security risks posed by generative AI apps (like ChatGPT). In particular, model cybersecurity, user data privacy, and ethical considerations are among the common concerns.

This post explains how to build secure AI systems by addressing potential vulnerabilities at three levels: data, development, and model operations.

The Three Pillars of AI Security

Over half of global businesses don’t have appropriate defense strategies against AI attacks despite being concerned about risk proliferation.

But most are looking to change that. Better AI trust, risk, and security management (AI TRiSM) is one of the major tech trends for 2024. This Gartner-proposed framework encapsulates different approaches business leaders take to build reliable, fair, and secure AI applications and reduce operational risks.

AI TRiSM principles include better model monitoring and explainability, which allow leaders to better understand how the model performs in real-world settings, detect biases early on, and address possible security vulnerabilities. ModelOps, in turn, is a set of practices and technologies aimed at improving model governance and lifecycle management. Similar to DevOps in software engineering, ModelOps brings greater predictability to model development through better training data management practices, model version control (to enable roll-back, re-training, and fine-tuning), extensive testing, and ongoing monitoring.

These two practices further build up a wider range of mechanisms for ensuring high AI application security and data privacy, as well as air-tight protection against the common AI security risks through AI data governance.

AI Data Security

Machine learning and deep learning require ample, varied, and vetted datasets for model training, validation, and retraining. Training datasets must be representative to prevent model biases, and also properly pre-cleansed and anonymized to ensure compliance with global privacy laws, a step not all businesses take.

Haphazard AI data storage practices and lack of security for data in transit can also lead to data theft or data poisoning — another two major risks.

Risk: Privacy and Copyright Breaches

Generally, you need 10X more data than model training parameters to develop a reliable AI model. However, global privacy laws limit AI developers’ ability to use customer data for model training.

Still, many developers, knowingly or not, train models on personally identifiable information (PII) obtained in violation of existing laws. Spotify had to settle a $5.4 million GDPR fine because it didn’t provide proper disclosures on how the data it collected on users was used by its machine learning model. OpenAI also received a class action suit for using allegedly stolen information of Internet users for model training without their knowledge or consent, and another suit from the New York Times for allegedly using its copyrighted content (with more likely to come soon).

Newly emerging AI regulations like the EU AI Act and the AI Regulation Bill from the UK government plan to introduce more stringent rules to ensure appropriate levels of data confidentiality and limit AI developers’ ability to ingest user data without users’ explicit consent and acknowledgment.

Solution: Data Minimization and Data Anonymization

Without robust anonymization, sensitive data can become part of the model’s training dataset, which poses two types of risks:

- Legal and compliance: Your organization is exposed if it doesn’t provide subsequent privacy protection.

- Cybersecurity: Threat actors can tamper with the model to reconstruct its training data and perform data theft.

To address both, use appropriate privacy-preserving techniques like homomorphic encryption (HE), differential privacy, secure multiparty computation (SMPC), or federated learning techniques as part of AI and ML implementation and integration.
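Of the techniques listed above, differential privacy is the easiest to illustrate in a few lines. The following is a minimal sketch of the Laplace mechanism applied to a bounded mean, not a production implementation. The function names, the clipping bounds, and the assumption that the dataset size is public are all illustrative choices, not part of any specific toolbox.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def dp_mean(values, lower, upper, epsilon, rng):
    """Differentially private mean of bounded values (Laplace mechanism).

    Assumes the number of records is public, so changing one record
    moves the mean by at most (upper - lower) / n.
    """
    if not values:
        raise ValueError("values must not be empty")
    # Clip every value so a single record has bounded influence.
    clipped = [min(max(v, lower), upper) for v in values]
    sensitivity = (upper - lower) / len(clipped)
    true_mean = sum(clipped) / len(clipped)
    # Smaller epsilon means more noise and stronger privacy.
    return true_mean + laplace_noise(sensitivity / epsilon, rng)


# Hypothetical usage: release an average age without exposing any one user.
ages = [23, 35, 41, 58, 62, 29, 47, 51]
noisy_average = dp_mean(ages, lower=18, upper=90, epsilon=0.5, rng=random.Random())
```

The privacy budget `epsilon` is a policy decision: lower values protect individuals better but make the released statistic less accurate.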

Sony, for example, created a specialized privacy toolbox that combines several methods for ensuring high data security and privacy. Users can automatically remove sensitive elements from training datasets, opt to use privacy-preserving training algorithms such as DP-SGD, and benchmark how the model can resist known attack techniques like model inversion or membership inference attacks (MIA).

Source: Sony

Risk: Data Poisoning

Cyber-exploits such as data poisoning aim to introduce biases, errors, or other vulnerabilities into the model training data. On a smaller scale, model data poisoning leads to concept drift. For example, a computer vision system starts classifying Ford cars as Ferraris.

On a larger scale, such attacks can render the system fully ineffective. A cybersecurity firm recently managed to gain access to 723 accounts with exposed HuggingFace API tokens, held by companies like Meta, Microsoft, Google, and VMware, among others. This vulnerability effectively gave the team full control over the training data repositories, which opened a direct path to data poisoning attacks.

Solution: Data Validation and Ongoing Model Monitoring

The OWASP AI Security and Privacy Guide provides several must-follow practices to prevent data poisoning in AI systems:

- Implement automatic data quality checks and privacy controls into data ingestion pipelines to validate the accuracy of incoming data.

- Add multiple layers of protection to training data storage: encryption, firewalls, and secure data transfer protocols, especially when integrating AI into existing platforms.

- Implement advanced model monitoring to detect anomalies in model performance and abnormalities in training data (e.g., odd data labels or sudden changes in the data distribution patterns).
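The first and third practices above can be sketched with two small checks: a per-record schema and range validation, and a comparison of label distributions between a trusted reference set and an incoming batch. This is a minimal illustration under stated assumptions. The schema format, field names, and function names are hypothetical, and the alerting threshold for the shift score is left to the reader.

```python
from collections import Counter


def validate_record(record, schema):
    """Return a list of problems found in one incoming record.

    schema maps field -> (expected_type, lower, upper); use None bounds
    for fields without a numeric range.
    """
    problems = []
    for field, (expected_type, lower, upper) in schema.items():
        value = record.get(field)
        if not isinstance(value, expected_type):
            problems.append(f"{field}: expected {expected_type.__name__}")
        elif lower is not None and not (lower <= value <= upper):
            problems.append(f"{field}: {value} outside [{lower}, {upper}]")
    return problems


def label_shift(reference_labels, incoming_labels):
    """Total variation distance between two label distributions.

    0.0 means identical label shares, 1.0 means no labels in common.
    """
    if not reference_labels or not incoming_labels:
        raise ValueError("both label lists must be non-empty")
    ref, new = Counter(reference_labels), Counter(incoming_labels)
    labels = set(ref) | set(new)
    return 0.5 * sum(
        abs(ref[label] / len(reference_labels) - new[label] / len(incoming_labels))
        for label in labels
    )


# Hypothetical schema for an ingestion pipeline.
SCHEMA = {"age": (int, 0, 120), "label": (str, None, None)}
issues = validate_record({"age": 999, "label": "approved"}, SCHEMA)
```

A sudden jump in the label shift score between batches is one signal worth investigating as possible label flipping, although it can also reflect a legitimate change in the data.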

Another helpful practice top AI teams use is training models on different subsets of training data (when possible) and then using an ensemble of these models to make predictions. This limits the impact of data poisoning attacks, since the attacker would need to compromise multiple models.
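To make the ensemble idea concrete, here is a toy sketch that trains one model per disjoint data partition and combines them by majority vote. The one-dimensional nearest-centroid classifier and all function names are stand-ins chosen for brevity. A real system would plug in its own model type and partitioning scheme.

```python
from collections import Counter, defaultdict


def train_centroids(samples):
    """Fit a tiny nearest-centroid classifier on (feature, label) pairs."""
    sums = defaultdict(lambda: [0.0, 0])
    for x, label in samples:
        sums[label][0] += x
        sums[label][1] += 1
    return {label: total / count for label, (total, count) in sums.items()}


def predict(centroids, x):
    """Return the label whose centroid is closest to x."""
    return min(centroids, key=lambda label: abs(centroids[label] - x))


def train_ensemble(samples, n_models):
    """Train one model per disjoint, interleaved partition of the data."""
    partitions = [samples[i::n_models] for i in range(n_models)]
    return [train_centroids(part) for part in partitions if part]


def ensemble_predict(models, x):
    """Majority vote across all models in the ensemble."""
    votes = Counter(predict(model, x) for model in models)
    return votes.most_common(1)[0][0]


# Toy data: low feature values are Fords, high values are Ferraris.
clean = [(1.0, "ford"), (9.0, "ferrari")] * 6
models = train_ensemble(clean, n_models=3)

# Simulate one compromised partition with flipped labels.
poisoned = train_centroids([(1.0, "ferrari"), (9.0, "ford")])
mixed_ensemble = [models[0], models[1], poisoned]
```

In this toy setup the poisoned model is outvoted by the two clean ones. The protection holds only while the attacker controls a minority of partitions.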

Secure Model Development

Vulnerabilities in both regular software and AI models can be acquired or created. Poor code or overlooked system dependencies can create security flaws in your model that an attacker could exploit. Furthermore, acquired components, such as open-source libraries or pre-trained models, may contain inherent flaws that put your company at risk.

Risk: Compromised Model Components

New AI developer tools, libraries, frameworks, platforms, and other application components emerge every day. Commoditized access to open-source and commercial solutions massively accelerated the speed of new model development. However, greater reliance on third-party technologies also increases risk exposure.

Security researchers have demonstrated how a tampered version of a machine learning model can be used to distribute malware in an organization. The researchers conducted their test with the popular PyTorch framework, but the same attack mechanics can be adapted to target other popular ML libraries like TensorFlow, scikit-learn, and Keras. In other words, companies that take pre-trained machine learning models from public repositories like HuggingFace or TensorFlow Hub risk bringing cyber threats into their environments.

Solution: Audit Your Toolchain

Make sure you procure all hardware and software for AI application development from reputable sources. This includes data, software libraries, middleware, external APIs, and on-premises and cloud infrastructure. The NCSC provides extensive recommendations for improving supply chain governance for technology acquisitions (including AI systems).

Also, evaluate all pre-trained models for signs of tampering or hijacking. Modern security tools can scan model code for evidence of abuse (for example, malicious code stored within the model files).
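One way such a scan can work is shown below for models serialized with Python's pickle format, which can execute code on load. This sketch uses the standard library's `pickletools.genops` to list opcodes that import or call objects, without ever unpickling the file. The opcode list and the `Payload` demo class are illustrative assumptions, and this is not how any particular commercial scanner is implemented.

```python
import pickle
import pickletools

# Opcodes that can import or call arbitrary objects during unpickling.
SUSPICIOUS_OPCODES = {
    "GLOBAL", "STACK_GLOBAL", "REDUCE", "INST", "OBJ", "NEWOBJ", "NEWOBJ_EX",
}


def suspicious_opcodes(pickle_bytes: bytes) -> list[str]:
    """List risky opcodes found in a pickle stream without loading it."""
    return [
        opcode.name
        for opcode, _arg, _pos in pickletools.genops(pickle_bytes)
        if opcode.name in SUSPICIOUS_OPCODES
    ]


class Payload:
    """Demo object that would call print() if it were ever unpickled."""

    def __reduce__(self):
        return (print, ("payload executed",))


# Plain data structures contain none of the risky opcodes.
plain_findings = suspicious_opcodes(pickle.dumps({"weights": [0.1, 0.2]}))

# The demo payload is flagged; pickle.dumps() only serializes, it does not run it.
payload_findings = suspicious_opcodes(pickle.dumps(Payload()))
```

Legitimate checkpoints also use some of these opcodes to rebuild their objects, so findings are best reviewed against an allow-list of expected imports rather than treated as proof of tampering.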

As a general principle, apply secure software development practices to AI models and use existing standards for application security controls and operational security — e.g., ISO/IEC 27090 AI infosec or the more general OWASP Application Security Verification Standard (ASVS).

Risk: Subpar Model Performance

Generative AI applications require substantial effort to develop. But on average, companies shelve over 80% of machine learning models even before deploying them to production. Yet, even a deployed model may not bring the expected “dividends”.

By the time a trained model enters production, its performance may be lower than expected because real-world data differs substantially from the training data. The Sophos Group demonstrated how well-performing malware detection models degraded within a few weeks in production. Faced with such odds, some teams may be tempted to rush the model release and cut corners at the development or testing stages.

Obviously, this undermines model security and creates avoidable technical debt, which then leads to higher operating costs. Cloud infrastructure and/or local data center costs for large AI models can easily reach seven figures per month, especially as your user base grows.

Solution: Pay Attention to Technical Debt

Low model performance and high operating costs often result from development decisions that favor short-term results at the expense of long-term benefits. A Google team outlined several factors that accelerate technical debt in machine learning models:

- Entanglements in machine learning packages. When the model mixes data sources, it’s extremely difficult to make isolated model improvements (e.g., optimize individual hyperparameters).

- Hidden feedback loops. When a model’s outputs influence its own future training data, false correlations get reinforced, leading to biased model outputs.

- Undeclared consumers. Applications and other systems use the output of a model as an input to another component of the system without the developers’ knowledge.

- A wide range of data dependency issues, such as underutilized packages that are available but mostly unneeded by the model.

…And the more familiar issues that drive technical debt in other software systems, such as glue code, data pipeline jungles, and configuration debt.

By acknowledging and addressing accumulated technical debt in AI applications, developers can reduce the system’s operating costs and improve its performance. Likewise, by establishing strong ModelOps practices of continuous integration, model versioning, and automated model re-training, among others, AI teams can release more robust and secure models faster but without compromising on quality.

Secure Model Operations

Productized AI is the ultimate destination for many businesses. However, exposure to real-world conditions and external users also means increased risk exposure.

Hackers can stage direct adversarial attacks to tamper with the model performance (e.g., to disclose sensitive training data, compromise its outputs, or use the model interface to gain access to other enterprise systems). A group of security researchers already showed how hackers could potentially use the GPT model to deliver malicious code packages into developers’ environments. All of this calls for appropriate AI model security measures in production environments.

Risk: Data Leakage and Disclosures

User-facing AI models must have appropriate security mechanisms to prevent:

- Disclosure of user inputs (e.g., prompts, input data values, etc.).

- Disclosure of model outputs to any unauthorized third parties.

Forcing a model to disclose either of the above is easier than you may think. Researchers from the Google Brain project demonstrated that, with a bit of fiddling, you can access data saved in a generative model’s memory. In their case, it was a fake social security number.

Accidental data leakage also happens quite often, mostly due to technical misconfiguration. OpenAI had to deal with its first data leak in March 2023 when paid users’ names, email addresses, and credit card numbers (the last four digits only) became publicly accessible for a brief period due to a system glitch.

Solution: Strong Data Management Practices

To prevent accidental or malicious data leakages, implement strong data management practices:

- Implement robust identity and access management (IAM) controls to ensure that only authorized users (and applications) have access to the model data.

- Use a model version control system (VCS) to keep better track of model variants and prevent releases of models trained on sensitive data.

- Apply encryption to data both at rest and in transit to minimize the odds of breaches.

- For generative AI models, implement input sanitization mechanisms to prevent certain characters or data types (associated with prompt injection) from being entered into the system.
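The input sanitization step mentioned above can be sketched as a small pre-processing function. This is a deliberately simple illustration. The length limit and the deny-list patterns are hypothetical placeholders, and pattern matching alone is not a sufficient defense against prompt injection, so treat it as one layer among several.

```python
import re
import unicodedata

MAX_PROMPT_CHARS = 2000  # assumed limit; tune per application

# Hypothetical deny-list; real deployments need broader, maintained rules.
DENY_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"reveal (your|the) system prompt", re.IGNORECASE),
]


def sanitize_prompt(text: str) -> str:
    """Normalize a prompt, drop control characters, and reject deny-listed phrases."""
    # Fold visually equivalent Unicode forms into one canonical form.
    text = unicodedata.normalize("NFKC", text)
    # Keep newlines and tabs; drop other control and format characters.
    text = "".join(
        ch for ch in text
        if ch in "\n\t" or not unicodedata.category(ch).startswith("C")
    )
    if len(text) > MAX_PROMPT_CHARS:
        raise ValueError("prompt exceeds the allowed length")
    for pattern in DENY_PATTERNS:
        if pattern.search(text):
            raise ValueError("prompt matches a deny-listed pattern")
    return text.strip()
```

Rejected prompts are worth logging, since repeated deny-list hits from one account can indicate probing.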

To secure its AI products for finance, JPMorgan established a new AlgoCRYPT Center of Excellence (CoE) in 2022, a unit focused on designing, sharing, and implementing new techniques for secure computation on encrypted data. In particular, the team performs research into fully homomorphic encryption (FHE), privacy-preserving machine learning (PPML), privacy-preserving federated learning (PPFL), and zero-knowledge proofs (ZK), among other areas. A lot of the produced research is public.

Risk: Direct Adversarial Attacks

Evasion attacks are another common type of AI security risk. In such cases, attackers try to alter the model’s behavior, either to make it act to their advantage or to compromise its performance. Researchers from Tencent demonstrated that adding small stickers in an intersection can trick Tesla’s Autopilot system into driving into the wrong lane.

Evasion attacks often rely on prompt injections: the submission of malicious content into system inputs/prompts to manipulate the system’s behavior. But they may also be staged in other ways by exploiting vulnerabilities in AI application design, e.g., unprotected APIs or weak access controls.

Apart from causing performance issues, hackers may also want to steal the entire AI model. This can involve a brute-force attack on a private code repository or phishing attacks aimed at users of MLaaS providers.

Solution: Enforce Strong Security Policies

Asset management is the pillar of strong cybersecurity. You should have a clear view of where all the model assets reside and the ability to track, authenticate, version control, and restore them in case of a compromise.

Similarly, enforce strong data management principles. Apply the principle of least privilege when it comes to accessing model training data. Implement different degrees of security based on the type of data and content the AI systems interact with.

To communicate model security to downstream users, you can also implement cryptographic model signing, which ensures a certain level of trust and can be verified by other users.
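As a rough illustration of the sign-then-verify workflow, the sketch below tags a serialized model with HMAC-SHA256 from Python's standard library. Note the swap: HMAC is a symmetric message authentication code, not a public-key signature, so anyone who can verify a tag can also create one. Publicly distributed models would need an asymmetric signature scheme instead. The function names and the sample key are hypothetical.

```python
import hashlib
import hmac


def sign_model(model_bytes: bytes, key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag over the serialized model."""
    return hmac.new(key, model_bytes, hashlib.sha256).hexdigest()


def verify_model(model_bytes: bytes, key: bytes, tag: str) -> bool:
    """Check the tag in constant time before the model is loaded."""
    return hmac.compare_digest(sign_model(model_bytes, key), tag)


# Hypothetical usage: the key would come from a secrets manager, not source code.
key = b"example-key-do-not-use"
model_bytes = b"serialized model weights"
tag = sign_model(model_bytes, key)

untouched_ok = verify_model(model_bytes, key, tag)
tampered_ok = verify_model(model_bytes + b"!", key, tag)
```

Verification should run before deserialization, so that a tampered file is rejected without ever being loaded.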

Risk: Model Drift

Model drift is a progressive decay of a machine learning model’s predictive capabilities. It mostly happens when conditions in the analyzed environment have changed, but the model lacks the knowledge to properly process them. For example, a mortgage risk prediction model trained on houses under $1 million loses its accuracy when prices increase.

This loss of accuracy can have major consequences. Take it from Zillow, whose proprietary property valuation algorithm over-estimated the value of the houses the company purchased in Q3 and Q4 by over $500 million.

The two common types of model drift are:

- Data drift happens when the distribution of the input data changes over time. For example, housing prices change.

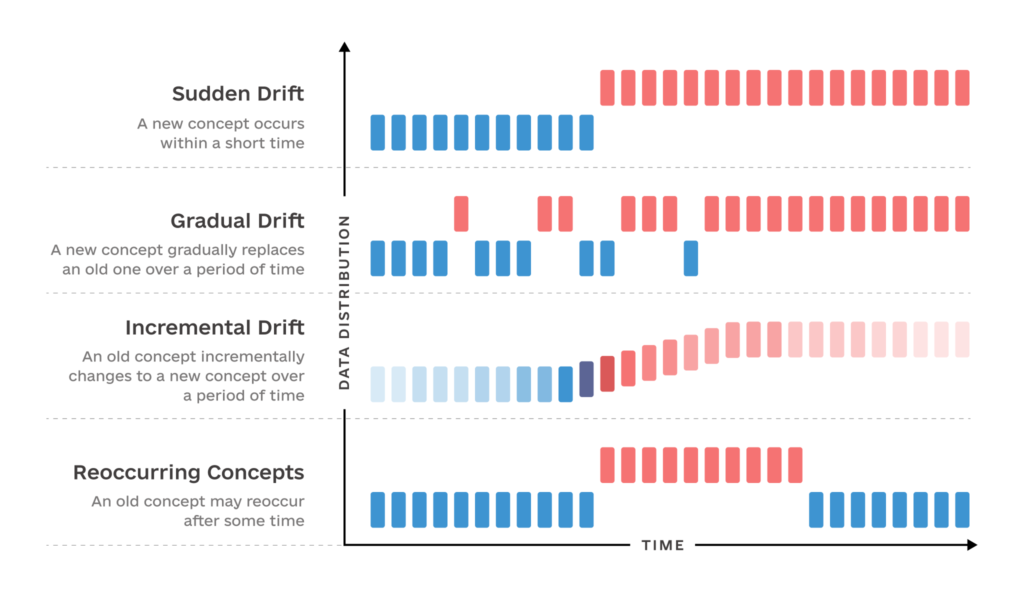

- Concept drift happens when the task that the model was trained to do changes. For example, a new type of payment fraud has emerged and the model cannot detect it. Concept drift can be sudden, gradual, incremental, or reoccurring.

Source: Arxiv

In either case, drift is no good for businesses, as it can create model biases and inaccuracies or even render the model fully ineffective.

Solution: Model Monitoring and Automatic Retraining

To ensure the model outputs remain accurate over time, implement automatic drift detection. Statistical measures like the Population Stability Index (PSI) and the Kolmogorov-Smirnov (K-S) test, among others, are great at detecting data drift. Concept drift detection requires tracking a combination of model performance metrics (e.g., recall, precision, F1-score, etc.) to evaluate the model’s behavior over time. Comprehensive monitoring helps detect both natural drift and signs of malicious intrusion.
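Both data drift measures named above fit in a few lines of plain Python. The sketch below computes PSI over equal-width bins derived from the reference sample and the two-sample K-S statistic as the largest gap between empirical distribution functions. The bin count, the small floor used for empty bins, and the function names are assumptions made for illustration.

```python
import math
from bisect import bisect_right


def psi(expected, actual, bins=10):
    """Population Stability Index between a reference and a new sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins
    if width == 0:
        width = 1.0  # degenerate reference sample: everything lands in one bin

    def shares(sample):
        counts = [0] * bins
        for x in sample:
            idx = int((x - lo) / width)
            # Clamp out-of-range values into the edge bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(sample), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def ks_statistic(sample_a, sample_b):
    """Two-sample K-S statistic: the largest gap between empirical CDFs."""
    a, b = sorted(sample_a), sorted(sample_b)
    return max(
        abs(bisect_right(a, x) / len(a) - bisect_right(b, x) / len(b))
        for x in a + b
    )


# Toy example: the same feature before and after a shift in its values.
reference = [i / 10 for i in range(100)]
shifted = [x + 5 for x in reference]
psi_score = psi(reference, shifted)
ks_score = ks_statistic(reference, shifted)
```

Both scores are zero for identical samples and grow as the distributions move apart. The alerting thresholds are application-specific and should be calibrated on historical data.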

As a next step, consider implementing automatic model retraining when the model performance dips below the acceptable threshold. Even a 1% improvement in model accuracy can lead to substantial benefits: better operational insights, faster operating speeds, and higher revenue.
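A retraining trigger of this kind can be as simple as computing a performance metric on the latest labeled window and comparing it with a floor. The sketch below uses the F1-score for a binary classifier. The 0.8 threshold and the function names are placeholder assumptions, and the actual retraining job is out of scope here.

```python
def f1_score(y_true, y_pred, positive=1):
    """F1-score for one positive class, computed from raw label lists."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    if tp == 0:
        return 0.0  # no true positives: precision and recall are both zero
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def needs_retraining(y_true, y_pred, threshold=0.8):
    """True when F1 on the latest labeled window falls below the threshold."""
    return f1_score(y_true, y_pred) < threshold


# Hypothetical monitoring window: ground-truth labels vs. model predictions.
window_truth = [1, 1, 1, 0, 0]
window_preds = [1, 0, 0, 0, 1]
retrain = needs_retraining(window_truth, window_preds)
```

In practice the check would run on a schedule, and a positive result would enqueue a retraining job rather than retrain inline.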

Conclusions

AI application security hinges on businesses’ ability to apply strong data management governance, secure application development principles, and robust security controls for deployed products.

By addressing security at three levels (data, development, and deployment), you can minimize the risks of dealing with both current and emerging threats. Use appropriate privacy-preserving techniques in combination with automatic data validation to protect data inputs and outputs. Be diligent about your AI development toolkit and system architecture to prevent the accumulation of technical debt and the proliferation of different vulnerabilities.

Implement proper security controls for identity and access management. Ensure comprehensive monitoring to detect signs of external tampering and natural decay in model performance. 8allocate’s AI and ML experts would be delighted to further advise you on the optimal AI security controls and best practices. Contact us to schedule an audit.

Frequently Asked Questions

Quick Guide to Common Questions

What are the most significant security risks in AI systems?

The most common risks include data privacy breaches, data poisoning, compromised model components, adversarial attacks, and model drift. These threats can compromise AI integrity, introduce biases, and expose sensitive information.

How can AI models be protected against data poisoning?

Implement data validation, anomaly detection, and strong encryption in data pipelines. Multiple models should be trained on different data subsets to reduce the impact of poisoned data.

What’s the best way to secure AI models from adversarial attacks?

Enforce strict access controls, API security, and prompt sanitization. Apply cryptographic model signing and use adversarial training techniques to strengthen model robustness.

How can companies prevent AI model drift?

Set up continuous model monitoring with automated drift detection algorithms (e.g., PSI, K-S test). Retrain models automatically when performance degrades to maintain accuracy and reliability.

How do businesses ensure AI data privacy and regulatory compliance?

Use data minimization, anonymization, and privacy-preserving techniques like homomorphic encryption and federated learning. Align AI governance with GDPR, the EU AI Act, and other regulations.

What security measures should be in place when using third-party AI models?

Conduct regular audits of pre-trained models, scan for vulnerabilities, and verify model provenance. Follow secure development standards such as ISO/IEC 27090 and the OWASP Application Security Verification Standard (ASVS).

How can organizations reduce AI technical debt?

Adopt ModelOps best practices, version control, and continuous integration to avoid hidden dependencies, bias accumulation, and scalability bottlenecks in AI systems.